A private WeChat group, circulated among workers from Zhangjiang, Shanghai, has recently been a subject of intrigue. Membership requires being an employee of a domestic chip company. In this group, even competitors exchange information and business resources. This “support group” acts as a ceasefire zone where industry peers can temporarily halt their competition and assist each other, united by a common opponent: Nvidia.

Due to Nvidia’s dominance, the sales teams of Chinese chip companies often face humiliating challenges.

A salesperson for a Chinese GPU company, who spoke under the pseudonym Li Ming, recounted his experiences during the AI boom. He started out confident, but clients soon bombarded him with questions: how did his company’s products compare with Nvidia’s A100 chip, and was there anything comparable to Nvidia’s NVLink? Despite his connections and heavy lobbying, clients consistently chose Nvidia.

The Nvidia A100, with 54 billion transistors packed into 826 square millimeters, is key to unlocking AI large models.

Using Nvidia’s chips is akin to having millions of people with an IQ of 200 doing calculations, compared to thousands with an IQ of 100 using other chips. Top tech companies are frantically acquiring Nvidia chips. The more high-end Nvidia GPUs a company owns, the better their chances of training smarter models.

According to 36Kr, OpenAI currently holds the largest number of high-end Nvidia GPUs globally, with at least 50,000 units. Google and Meta also possess clusters of around 26,000 units each. In China, only ByteDance, with about 13,000 units, matches this scale.

Nvidia monopolizes the best resources in the global supply chain—having been allocated most of the advanced chip production capacity from TSMC and attracting the largest group of engineering users worldwide, thus holding the lifeline of many AI companies.

Absolute monopoly often breeds discontent, anger, and a desire to break free.

“Today, everyone involved in large model training is losing money, except Nvidia,” said an industry insider indignantly. “Nvidia’s profit margins are causing severe discomfort and harming the AI industry.”

Financial reports show Nvidia’s gross margin is 71%, with the popular A100 and H100 series boasting a gross margin of 90%. As a hardware company, Nvidia enjoys higher profit margins than most internet software companies.

The exorbitant prices are driving Nvidia’s major customers away. On July 30, Apple announced it would use 8,000 Google tensor processing units (TPUs) for AI model training, with none from Nvidia. The news led to a 7% drop in Nvidia’s stock price on July 31, wiping out USD 193 billion in market value—almost equivalent to the entire market cap of Pinduoduo.

For Chinese GPU companies looking to take a piece of Nvidia’s market, 2022 was a turning point. Under successive US export bans, Nvidia, struggling to hold on to the Chinese market, kept releasing cut-down versions of its chips there, only to see them banned in turn:

- In September 2022, exports of A100 and H100 chips to China were banned, leading Nvidia to release the cut-down A800 and H800 versions.

- In October 2023, Nvidia’s exports of the A800, H800, L40, L40S, and RTX 4090 chips to China were banned.

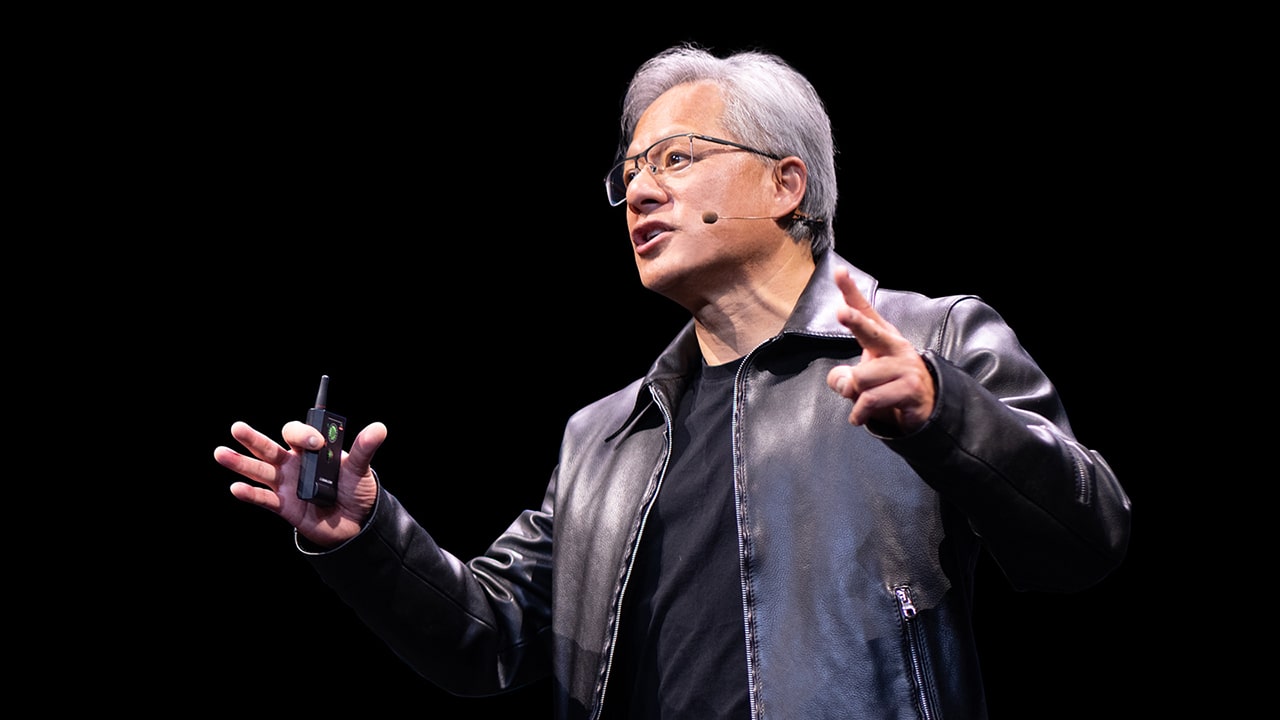

- In June 2024, Nvidia’s founder Jensen Huang announced the release of cut-down L20 and H20 chips for China.

However, these cut-down versions provoked an even fiercer industry backlash. The H20, which Nvidia is about to release, costs half as much as the H100 but delivers only about one-third of its performance. An industry insider angrily called it “pure exploitation.”
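Taking the two ratios quoted above at face value (they are industry estimates, not published specifications), the H20’s performance per unit of price works out to roughly two-thirds of the H100’s:

$$
\frac{\text{performance}_{\mathrm{H20}} / \text{price}_{\mathrm{H20}}}{\text{performance}_{\mathrm{H100}} / \text{price}_{\mathrm{H100}}} \approx \frac{1/3}{1/2} = \frac{2}{3}
$$

Put the other way round, each unit of compute on the H20 would cost about 1.5 times as much as on the H100, which goes some way toward explaining the anger.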

As Nvidia’s customers grew dissatisfied and angry, Chinese chip companies aiming to replace Nvidia found opportunities in this sentiment.

Previously, they could only pick up the crumbs Nvidia left behind. Semiconductor analysis firm TechInsights reported that, in 2023, Nvidia held a 98% share of data center GPU shipments, leaving just 2% for all other chip companies combined.

Now, with the bans, Nvidia’s near-total grip on the Chinese market has cracked. Who can replace Nvidia? Domestic AI chip companies see their chance.

“This year, Nvidia’s 90% market share in China has been freed up. Whoever can grab it, grabs it,” said the founder of a domestic GPU company.

In 2021, 36Kr published a report detailing how competitors eyed Contemporary Amperex Technology (CATL) in the power battery industry. Now, Nvidia, dominant in AI chips, is seen as a thorn in the side of many peers.

However, Nvidia’s barriers are higher, and the gaps with its competitors are larger. Domestic GPU and AI chip manufacturers, though weaker, understand the Chinese market better and have more localized strategies. Meanwhile, established chip giants like Intel and AMD have more resources to confront Nvidia directly.

In the short term, Nvidia won’t be defeated, but it won’t be unscathed either.

Breaking through

To break through, one must find the opponent’s weakness. One of Nvidia’s weaknesses is arrogance.

The chip industry is essentially a B2B software industry. Customers need a sense of “companionship” from chip manufacturers: hardware debugging, software and hardware compatibility work, and thorough support. This is what keeps customers loyal and makes chip products hard to replace.

However, industry insiders told 36Kr that, in the Chinese market, except for large buyers like Alibaba, Baidu, Tencent, and ByteDance, most companies, even with big-ticket transactions, rarely receive after-sales service from Nvidia.

When Chinese engineers using Nvidia’s chips have questions, they often have to search the company’s website or look toward the community for answers.

When working with Nvidia, Chinese customers’ various needs are often unmet. A chip industry insider told 36Kr that Nvidia usually pushes the most sophisticated and expensive solutions in China. Yet, customer requests for customized solutions are generally denied. After purchasing the cards, customers have to find their own solutions or seek out an algorithm company to help them solve their problems.

This approach has accumulated many complaints from small and medium enterprise customers. “Nvidia, now a big company, no longer values small customers as it did in the past. Its products have no challengers, so they don’t need to please customers,” the insider said.

Yet Nvidia’s own rise showed how much service matters in the chip industry. In 2006, when the CUDA ecosystem was just getting started, Nvidia’s products were unremarkable. Nvidia began by working with university research teams and gradually expanded into one sector after another, offering hardware and software adaptation along the way, which laid the foundation for its vast market share today.

Chinese chip manufacturers have realized this and are attempting to start with customer service. Since 2023, a domestic AI chip company, preferring to remain anonymous, has had backend R&D personnel serve on the front lines, joining in joint debugging onsite and providing after-sales support. Even for orders worth only six- or seven-figure RMB sums, customers have access to consultation services round the clock.

Providing localized, attentive service alone isn’t enough. Amid Nvidia’s retreat from China, the chip industry is no longer just a product battle but a test of seizing time windows.

Among the Chinese companies making the most aggressive moves is Huawei, which previously partnered with iFlytek to launch the “Xinghuo One” device equipped with the Ascend 910B. The chip has been touted as a rival to Nvidia’s A100, but its polished debut concealed a great deal of effort: 36Kr learned that Huawei spared no manpower, deploying hundreds of engineers to help iFlytek tune parameters.

This benchmark case did not go unnoticed and has led numerous large model and internet companies to extend testing invitations to Huawei.

A Chinese chip salesperson was surprised to find that, since last July, high-ranking Huawei managers have been present at every public tender for smart computing centers. “Huawei can now send hundreds of people to support a single project, even losing money on some critical projects to gain revenue from others.”

The anonymous chip company mentioned earlier has also assembled a 200-member ironclad sales team—a rare configuration in the Chinese chip industry. Its sales team started by focusing on the three hottest areas for large model applications: finance, law, and industry, appearing at almost every computing-related event.

A price war among Chinese chip makers has also quietly begun.

An industry insider told 36Kr that the goal is to win more smart computing center orders at any cost. 36Kr observed that some Chinese companies have removed expensive high bandwidth memory (HBM) from inference cards to reduce costs, shipping at prices 50% below cost. “Everyone hopes to break through from various entry points, each taking a small piece from Nvidia, so it no longer stands alone.”

But the reality can be harsh. From a product standpoint, Chinese AI chips inevitably have various problems. 36Kr was told that processing a dataset takes only ten days with an Nvidia A100 cluster, but up to several months with domestically produced chips. The short development time and lack of advanced manufacturing processes have led to inefficiencies in hardware.

Software shortcomings are also evident. Another industry insider found that, when running large models on Chinese chips, attempts at more sophisticated applications often led to frequent crashes. “In many cases, people use domestic chips reluctantly.”

Now, companies are adopting more practical strategies. A few, represented by Moore Threads and Huawei, still aim to build 1,000-card clusters for training, confronting Nvidia head-on. Most, however, represented by Iluvatar CoreX and Enflame, focus on deploying large and small models across various industries, targeting scenarios with lower hardware and software demands.

“We no longer blindly chase Nvidia. We can’t afford to and dare not blindly make chips with super high computing power,” an industry insider said.

A realistic consideration for Chinese chip makers is that Nvidia’s main focus lies elsewhere, so direct confrontation is best avoided.

Previously, to save costs, most companies used the RTX 4090, one of Nvidia’s consumer gaming graphics cards, for inference. These cards had many drawbacks: high power consumption and insufficient memory, and Nvidia prohibited their use for large model inference. Chinese chip companies are capitalizing on this gap. Enflame and Iluvatar CoreX promote inference cards comparable to the RTX 4090, with large memory, low power consumption, and a stable supply.

They also target niche markets, such as power-sensitive scenarios with low-power chips or video optimization and other niche applications, doing small but profitable business.

A battlefield of giants

When Chinese GPU companies claim to “surpass Nvidia” in their presentations, it often represents an aspirational vision rather than current reality. These companies, relatively new and benefiting from the trend of domestic substitution, view even slight advancements as significant achievements. Nvidia serves as both a competitor and a benchmark for them.

However, the atmosphere is tenser when it comes to Intel and AMD, which are on par with Nvidia. “We internally consider Nvidia a mortal enemy,” a developer in AMD’s MI product line told 36Kr.

At Computex 2024 in June, AMD CEO Lisa Su, a relative of Nvidia founder Jensen Huang, clearly outlined the company’s GPU product strategy: releasing a new GPU every year, matching Nvidia’s update cycle.

Whenever Nvidia launches a new GPU, AMD buys it immediately for disassembly and comparison with their upcoming products.

“Add a feature here, raise a parameter there,” the developer told 36Kr, emphasizing that AMD aims to ensure its hardware isn’t lagging behind Nvidia, and might actually be slightly ahead in specifications.

Since 2023, AMD’s Chinese ecosystem partners have received new software optimization requests from the company almost daily. To promote its GPUs, AMD executives sometimes bundle them with the company’s more competitive CPUs, even at the risk of selling fewer CPUs.

“AMD’s people pray every day that we can build out the ecosystem,” said an executive from an unnamed ecosystem partner. Over ten cloud service providers and B2B customers in China are currently verifying and adapting AMD’s chips.

Compared to Chinese chip manufacturers, international giants like AMD and Intel have hardware advantages due to advanced manufacturing processes and high bandwidth memory (HBM) capacity. Consequently, their products can often match or even exceed Nvidia’s in some respects.

According to official figures, AMD’s MI300X, released in December 2023, delivers 1.2 times the computing power of Nvidia’s H100. Intel’s Gaudi 3, launched in April 2024, is likewise said to surpass the H100 in energy efficiency and inference performance while costing less. AMD’s GPUs are priced at about 70–80% of their Nvidia counterparts.

However, companies directly competing with Nvidia face a common challenge: despite their hardware advantages, they lag in software, which has become a significant bottleneck. Nvidia’s CUDA platform gave developers a programming interface for writing general-purpose GPU programs in familiar languages such as C and C++, and the deep software ecosystem built on it is a substantial moat.

“Imagine you learned a language and used it for work for many years. If I ask you to switch to a new language, wouldn’t you find it difficult and resist?” an employee from a chip company explained to 36Kr.


A former Intel employee told 36Kr that the company once deployed over 3,000 engineers globally and spent three to four years on the effort, yet only improved precision from 0% to 4%. Using an Intel chip to convert a human portrait often meant a long wait, only to end up with an unrecognizable image.

Since AMD and Intel GPUs have fewer users, their corresponding software platforms, ROCm and oneAPI, also see limited use, making it challenging to fully harness their hardware capabilities.

“Nvidia’s CUDA has so many developers iterating algorithms on it, making Nvidia’s inference and training highly efficient, giving Nvidia constant bargaining power and insight into what their next chip should do. This is something both AMD and Intel struggle with,” said a CEO of a company in AMD’s ecosystem. “Currently, AMD’s software stack, ROCm, is like Nvidia’s CUDA from 20 years ago.”

For downstream customers, this situation presents risks. Validating large models is inherently uncertain. Running them on an unproven chip adds another layer of uncertainty—abandoning Nvidia means bearing significant migration costs and risks.

Nonetheless, for AMD and Intel, the battle against Nvidia is unavoidable.

The global chip architecture market is divided into three realms: the x86 architecture, with Intel and AMD, dominates the PC field; the mobile market is Arm’s domain; and Nvidia leads the AI market.

In the 18 months since the new AI revolution began, Nvidia’s market cap at one point surpassed USD 3 trillion, seven times the combined value of Intel and AMD.

After two decades, the giants are once again engaged in a fierce battle to challenge Nvidia, a late but necessary counterattack.

When real cracks appear

As Chinese AI chip companies form an “ant army,” and AMD and Intel go all out, has Nvidia, besieged on all sides, really been shaken?

Nvidia’s empire does show signs of cracking.

A signal Nvidia must heed is that major customers heavily invested in AI, such as OpenAI, Google, and Microsoft, are taking their first steps toward a post-Nvidia world. Self-developed chips have long been part of their strategy. A former core member of Google’s TPU team told 36Kr that Google, which uses a quarter of the world’s computing power, “might stop external chip procurement by the end of the year.”

Previously, Google’s in-house TPU development was mainly cost-driven, worrying about Nvidia’s price hikes or unstable supply. Now, Google’s chip strategy is more aggressive—investing almost regardless of cost.

OpenAI has many backup plans and has reportedly sought to raise as much as USD 7 trillion to build a new AI chip empire.

In China, 36Kr learned from multiple sources that Alibaba, ByteDance, and Baidu—Nvidia’s largest buyers in the country—are secretly developing chips for large model training.

However, self-developed chips are a long-term solution. In the short term, these major customers are also exploring products from Nvidia’s competitors to reduce dependence on Nvidia.

AMD is a prominent plan B. An AMD insider told 36Kr that AMD’s GPU products have already won over large customers in Europe, the US, and South Korea. Microsoft has ordered tens of thousands of AMD products, and Tesla, Midjourney, US national laboratories, and KT Corporation have also made bulk purchases.

In China, 36Kr was told that hundreds of units of a specific AMD accelerator card were shipped in 2023. The number is modest, but the product had reportedly been virtually unknown in China before. AMD previously projected that its data center GPUs would bring in more than USD 2 billion in revenue in 2024.


Though Chinese chip companies have yet to pose a substantial threat to Nvidia, they are making promising progress. 36Kr learned that sales of domestic training and inference chips have reached a new level. One encouraging sign is that once-resistant customers in the internet and large model sectors are opening up to domestic chip manufacturers. For instance, Huawei’s Ascend chips have broken into Baidu’s ecosystem.

Additionally, Chinese AI companies such as Zhipu AI, MiniMax, and StepFun are training trillion-parameter models. Due to the limited availability of high-end Nvidia chips, these companies often adopt a mixed training approach, using a mix of Nvidia and other chips. For example, nearly half of Zhipu AI’s cluster comprises Ascend chips.

Furthermore, Enflame and Iluvatar CoreX have shipped tens of thousands of inference chips since last year, through channels including major smart computing centers in China. Enflame has entered Baichuan Intelligence’s supply chain, and Baidu has shipped 30,000–50,000 units of its Kunlun chips over the past two generations, with about half going to external customers.

“Nvidia’s current pricing and supply levels are testing everyone’s limits,” an industry insider said.

Looking 3–5 years ahead, Nvidia faces emerging threats. The industry is witnessing the rise of AI chip architectures beyond GPUs. For instance, Groq, a Silicon Valley chip company with a language processing unit (LPU) architecture, claims to run large language models ten times faster than Nvidia’s GPUs. Another Silicon Valley chip company, Etched, has released application-specific integrated circuit (ASIC) chips, also claiming speeds an order of magnitude faster than Nvidia’s GPUs. These startups are backed by investors like OpenAI.

In China, new AI chip startups have also emerged this year. Shanghai has recently supported the establishment of two new AI chip companies. Yanggong Yifan, CEO of domestic TPU company Zhonghao Xinying, said that GPUs have an overall transistor utilization rate of only 20%, revealing significant flaws. In contrast, new architectures like TPUs and ASICs, though less versatile, can achieve 60–100% transistor utilization. “In the next 3–5 years, many AI chips beyond GPU architecture will undoubtedly appear.”

These sparks may just be enough to shake Nvidia.

“Do you think Nvidia is unbeatable with no rivals? It’s not,” an Nvidia employee told 36Kr, adding that Huang often says “we are only 30 days away from bankruptcy.”

Nvidia spent over a decade preparing and collaborated with visionary companies like OpenAI to create its miracles. Yet the semiconductor industry is rife with stories of giants being overtaken on the bend.

The battle to encircle Nvidia has already ignited. Who will come out on top?

KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Qiu Xiaofen for 36Kr.