Imagine that you or a loved one is in need of a critical medical procedure. The doctors have already done everything they can, but they need to make a decision quickly, and there are a few possible courses of action. They consult with each other and review all the available data, but in the end, they turn to a computer program to help them make the final call. How do you feel about putting your life in the hands of an algorithm?

Artificial intelligence (AI) has become increasingly prevalent in healthcare in recent years. From detecting diseases and diagnosing patients to personalizing treatment plans, AI is transforming the way that healthcare providers work. However, as with any new technology, there are ethical considerations to take into account when it comes to using AI in healthcare.

AI Healthcare Companies Leading the Charge in SEA

AI’s influence is visible across Southeast Asia in various healthtech startups. Companies like Doctor Anywhere and MyDoc in Singapore offer AI-powered triage and remote monitoring services, connecting patients and healthcare providers virtually. Healint, also from Singapore, uses AI to help manage chronic migraines through an app that tracks all aspects of the associated pain, including triggers, symptoms, medications, and pain intensity. Naluri in Malaysia provides AI-backed personalized health coaching for patients with chronic conditions.

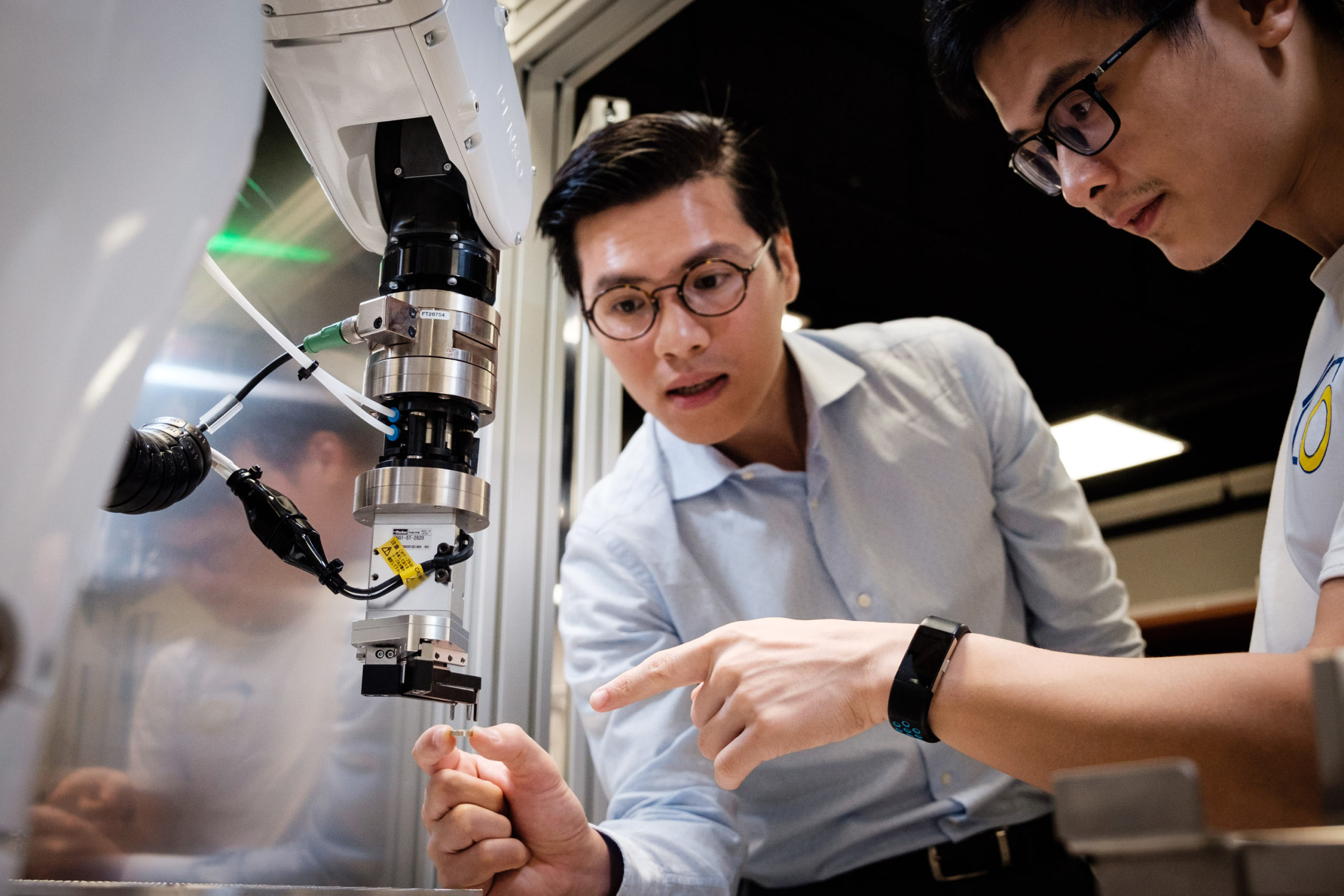

VinBrain, a Vietnamese AI company, is making significant strides in medical image analysis, disease diagnosis, and clinical decision support systems. Steven Truong, VinBrain’s CEO, emphasizes their commitment to creating universally applicable software that bridges AI and human intelligence for the benefit of global health outcomes.

Navigating Ethical Challenges: Patient Data Privacy and AI Transparency

The potential benefits of AI in healthcare are vast, but it is essential to navigate the ethical minefield surrounding privacy and security to ensure that the technology is implemented responsibly. For example, patients should be made aware of the specific AI algorithms used in their care, how these algorithms were developed, and any potential biases or limitations associated with them. This empowers patients to make informed decisions about their treatment and ensures they maintain control over their personal information.

Another major ethical concern in AI-powered healthcare is maintaining patient privacy. While the use of AI in healthcare can lead to improved diagnoses and treatments, it also raises questions about who has access to the data used to train these algorithms and how this information is being shared and utilized.

At VinBrain, the safeguarding of patient privacy is paramount. The company has stringent measures in place to ensure the anonymization of medical records and other patient data before they are used in training, validation, and testing processes. Recognizing that automatic processes may not always be foolproof, VinBrain also conducts manual reviews of random training data during the validation stage and at runtime. This two-pronged approach acts as a monthly audit system, ensuring the utmost protection of patient data as the company strives to enhance healthcare outcomes.

Another way to address privacy concerns is to adopt privacy-preserving techniques, such as federated learning and differential privacy. Federated learning enables AI models to be trained on decentralized data sources without sharing the raw data: each site trains on its own records and shares only model updates, which are then combined centrally. Differential privacy adds carefully calibrated statistical noise to data or to the results computed from it, limiting how much can be inferred about any one individual while still allowing AI algorithms to learn from the overall trends and patterns.
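To make these two ideas concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any company named in this article implements privacy. The function names (`federated_average`, `private_count`), the toy patient records, and the choice of `epsilon` are all hypothetical. The first function combines model weights from several sites, weighted by how many records each site holds, so that raw records never leave the site. The second applies the Laplace mechanism, one common way to achieve differential privacy, to a simple counting query.

```python
import random


def federated_average(site_updates):
    """Combine per-site model weights into one global model.

    site_updates: list of (weights, n_samples) pairs, where weights is a
    list of floats produced by training locally at one site. Only these
    weights are shared, never the underlying patient records.
    """
    total = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in site_updates) / total
        for i in range(dim)
    ]


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution."""
    # The difference of two i.i.d. exponential draws is Laplace-distributed.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records that match predicate.

    Adding or removing one patient changes a count by at most 1, so the
    query has sensitivity 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical example: two hospitals share weights, not records.
global_weights = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])

# Hypothetical toy dataset for the counting query.
patients = [{"migraine": i % 3 == 0} for i in range(30)]
rng = random.Random(0)
noisy = private_count(patients, lambda p: p["migraine"], epsilon=0.5, rng=rng)
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate answers. Choosing that trade-off is a policy decision as much as a technical one.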

Trust and AI: The Bias Problem

One of the most pressing issues is that of trust. Can we trust algorithms to make decisions about our health? The answer is not a simple yes or no. On the one hand, AI has the potential to improve patient outcomes by providing more accurate and efficient diagnoses and treatments. For example, in 2020, researchers at Imperial College London developed an AI system that can diagnose heart disease from CT scans with almost 90% accuracy. This is a significant improvement over traditional methods, which can be time-consuming and less accurate.

On the other hand, there are concerns about the potential for bias and errors in AI algorithms. These algorithms are only as good as the data that they are trained on, and if that data is biased or incomplete, then the algorithm will be as well. This can lead to inaccurate diagnoses and treatments that could put patients at risk. For example, a 2019 study found that a popular commercial algorithm used to guide medical care for patients with complex health conditions systematically underestimated the needs of Black patients compared with equally sick white patients, because it used past healthcare costs as a proxy for illness.

Companies like VinBrain are working to tackle potential bias in AI algorithms by using diverse training data from people worldwide. Human experts label the data, creating a knowledge base for AI training. To standardize interpretation and minimize bias, VinBrain collaborates with top institutions and doctors from Stanford, UCSD, Harvard, and Vietnam, ensuring accurate and inclusive AI tools for all patient populations.

Enhancing AI Transparency and Accountability

There are also concerns about the lack of transparency and accountability when it comes to AI in healthcare. Unlike human doctors, many algorithms cannot readily explain the reasoning behind a particular decision. This can make it difficult for patients to understand why a certain treatment was recommended or to challenge a decision if they believe it was incorrect.

Truong emphasized the importance of transparency and the necessity for AI to be explainable. He suggested that all metrics and detection descriptions should be communicated in simple, everyday language, just like a conversation between a doctor and a patient. He further stressed that the language of AI should be universally understood, making it accessible to everyone.

So, what can be done to address these ethical concerns? One solution is to implement rigorous testing and evaluation of AI algorithms before they are used in clinical settings. This can help to identify any biases or errors in the algorithm and ensure that it is as accurate and reliable as possible. Additionally, there should be transparency about how these algorithms are developed and how they are being used in patient care.
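One simple form such an evaluation can take is comparing a model's accuracy across patient groups before deployment. The Python sketch below is only an illustration: the function names, the group labels "A" and "B", and the toy results are hypothetical, and a real clinical audit would use far more data and more than one metric.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy separately for each patient group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Return the difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation results for two patient groups.
results = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 1),
]
per_group = accuracy_by_group(results)
gap = max_accuracy_gap(per_group)
```

A large gap between groups does not by itself explain why the model underperforms, but it flags where the training data or labels deserve a closer look before the algorithm reaches patients.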

Another important step is to ensure that AI algorithms are developed with diversity and inclusivity in mind. This means using data from a diverse range of patients to ensure that the algorithm is accurate for all populations. It also means involving patients and healthcare providers from diverse backgrounds in the development process to ensure that the algorithm is sensitive to cultural and linguistic differences.

Moving Forward: Ensuring Ethical AI in Healthcare

It’s fair to say that the use of AI in healthcare has the potential to revolutionize the industry and improve patient outcomes. However, it is essential that we approach this technology with caution and address the ethical concerns that come with it. By implementing rigorous testing and evaluation, ensuring transparency and accountability, and developing algorithms with diversity and inclusivity in mind, we can ensure that AI is being used responsibly and ethically in healthcare.

When asked for his final thoughts on AI’s role in healthcare, Truong said, “AI serves as an invaluable tool in hospitals, facilitating quick and accurate decision-making, ultimately leading to superior patient outcomes. It’s about augmenting the intelligence of the real decision-makers — the doctors. We must understand that AI isn’t a decision-maker in itself; rather, it functions as a robust decision-support system.”

All opinions expressed in this piece are the writer’s own and do not represent the views of KrASIA.