A little over a year ago, Wu Hua was traveling in South Korea, enjoying a getaway like millions of tourists do. She snapped a photo, tagged the location, and posted it on social media without a second thought. Days later, back home, a friend sent her a screenshot that would shatter her peace of mind: someone had created an account almost identical to hers, complete with her profile picture and username. But there was one crucial difference — the account’s bio was in Korean, and it included a suspicious link.

Curiosity turned into horror when Wu clicked the link. It led to a pornographic site, where vile, paid videos filled the screen. Worse yet, the faces in these videos had been replaced with hers, all courtesy of artificial intelligence technology.

In late August, when Wu first read about South Korea’s deepfake scandal, the fear that had been simmering inside her boiled over. “It was disgusting, horrifying,” she told 36Kr.

Wu still chokes up when recalling the first time she clicked on one of these AI-generated videos. Although she managed to report the fake account and have it deleted, she remains unsure if her face is being used elsewhere.

Deepfake technology—the use of AI to generate synthetic media in formats such as images and videos—has triggered what’s now being called the “Nth Room 2.0 case,” throwing South Korea into turmoil. Countless women have become the targets of men using AI to victimize them.

A male student from Inha University created a chat room with over 1,200 members. Using deepfake technology, they placed the faces of female students onto pornographic videos and openly shared their contact information in the chat.

This conspiracy, which began at Inha University, unraveled quickly. Since August 20, Korean media and police have uncovered a wave of similar chat rooms created by individuals from schools, hospitals, and even the military.

The largest of these chat rooms reportedly had up to 220,000 members. Across South Korea, nearly every middle school and university has similar “abuse rooms.”

In these group chats, men use women they know in real life as targets for humiliation. Using various deepfake tools, they swap women’s faces into obscene images and videos. The men in the groups then engage in collective verbal abuse, which often escalates into offline threats and harassment.

Larger abuse rooms are even subdivided into more specific categories, such as “family abuse rooms,” where men upload secretly taken photos of their mothers, sisters, or daughters for public shaming.

In 2019, when the original “Nth Room” case shocked the world, one internet user sarcastically remarked, “There are only 260,000 taxis in all of South Korea, meaning your chances of meeting an Nth Room member on the street are as high as bumping into a taxi.”

This statement is even more chilling today, as deepfake chat rooms spread unchecked.

While the original Nth Room case primarily involved voyeuristic footage that had to be filmed by the perpetrators themselves, the rapid advancement of deepfake technology has drastically reduced the cost of such crimes. AI-generated images and videos are now so realistic that distinguishing them from real content is becoming increasingly difficult.

In May this year, a 40-year-old Seoul National University graduate with the surname Park was arrested for using photos of 48 women to produce 1,852 pieces of sexually exploitative content. All the victims were women he knew personally.

A teacher in Seoul discovered she was the target of one of these abuse rooms, and the perpetrator was one of her students, though she couldn’t determine which one.

“Will that photo follow me for the rest of my life?” she tearfully asked the media.

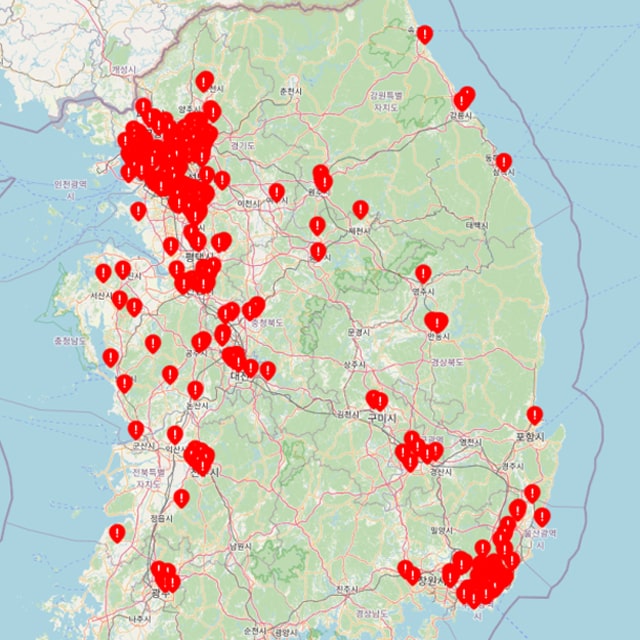

After the scandal broke, volunteers created a map of offenders based on media reports and anonymous tips. The map is covered in red markers, nearly engulfing central South Korea.

According to cybersecurity monitoring organization SecurityHero, 53% of the world’s deepfake pornographic content in 2023 originated from South Korea—an alarming statistic considering the country’s GDP accounts for less than 2% of the global total.

Confronted by despair and outrage, South Korean women have been fighting back amidst an oppressive public climate.

Fighting a silent battle

No one knows how many women have fallen victim to this. Every day, college student Ji-young (pseudonym) gulps down large cups of coffee to stay awake. “I’m afraid of missing any reports from victims.”

Since late August, she has barely slept.

A battle is being waged.

On X (formerly Twitter), Ji-young is constantly at odds with South Korean men. Whenever she posts a URL calling for others to report misogynistic content, the link is altered within minutes, which suggests that misogynists have long been monitoring her account in real time.

In her conversation with 36Kr, Ji-young used a voice changer—all the women active on X in connection with this case have become the primary targets of Korean men’s doxxing campaigns. She couldn’t reveal any personal information.

In South Korea, where women’s rights are already marginalized, being labeled a feminist is dangerous. The largest feminist Twitter account, “Queens Archive,” was shut down after male users mass reported it for exposing the deepfake chat rooms.

“Sometimes we don’t bathe or sleep, staying up until 3 or 4 a.m.,” Ji-young told 36Kr. She and her comrades on X are urgently translating all information related to deepfaking, victim protection guides, and feminist literature into English, sharing it nonstop.

Both the perpetrators and victims in this deepfake case are younger than those in the original Nth Room. From January to July 2024, South Korea prosecuted 297 cases of deepfake-related sexual exploitation, arresting 178 suspects. Of these, 131 were teenagers, making up 73.6% of the total.

Many are calling this the Nth Room 2.0 case, but it hasn’t garnered nearly the same level of public attention. To many women, the response from the police and government has been passive at best.

Because AI-generated content is regarded as “not real,” it initially went unnoticed by law enforcement.

“The government failed to handle the original Nth Room case properly. Now, many perpetrators of deepfake are minors, as are many of the victims. The perpetrators don’t believe there will be consequences, and they don’t fear jail time,” Ji-young told 36Kr.

To date, South Korean police have only arrested around 15 people, and some offenders have emigrated to avoid prosecution.

“The deepfake case, along with prior cases like the Nth Room and Soranet, is estimated to have over a million victims. No one knows the exact number,” Ji-young lamented. “If we don’t apply pressure on the government quickly, they won’t act at all.”

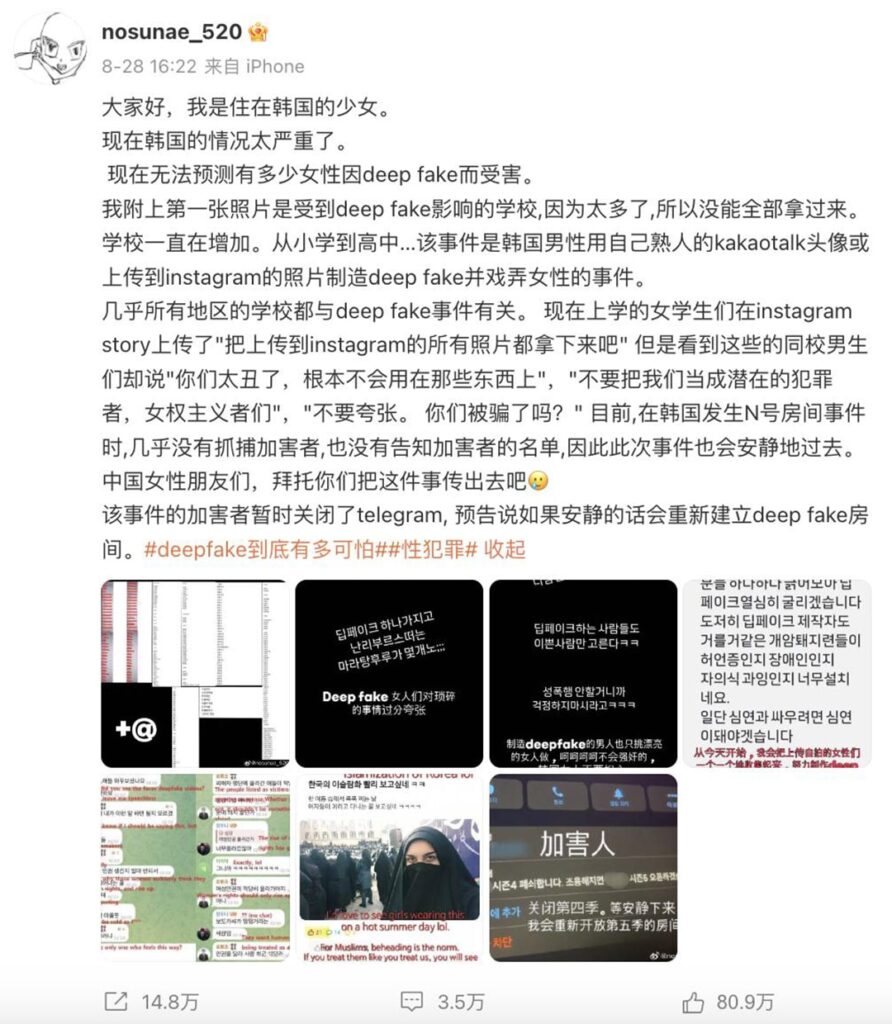

South Korean women have begun seeking help from overseas, relaying news of the deepfake scandal to social media users in China and Japan.

Eun-jung (pseudonym), a South Korean college student, took to Xiaohongshu, using awkward machine translations to plead for attention and spread the word, despite repeated account bans. On Weibo, posts from Korean women crying for help were shared nearly 150,000 times in just two days.

No one knows where these efforts will lead. Eun-jung told 36Kr that her younger sister is now a middle school student. Aside from the school advising girls to protect themselves, no other measures have been taken. She and her sister have deleted all photos with their faces from social media and now wear long pants every day.

It wasn’t until the issue exploded internationally that the South Korean government finally began taking it seriously. Recently, various political parties in South Korea have publicly addressed the deepfake scandal, calling for immediate resolution.

The ubiquity of deepfake abuse rooms

What would it take for an ordinary Chinese man with no AI expertise to become a deepfake perpetrator?

According to SecurityHero’s report, China accounts for 3% of the world’s deepfake pornographic content. Although this is much lower than the share for South Korea (53%) or the US (20%), the actual scale of China’s deepfake porn industry is likely much larger.

A quick search for “deepfake” on X reveals Chinese sellers offering tools with lurid keywords like “AI undressing,” “bondage,” and “bikini.” However, their profiles clearly state that they only provide the tools and are not responsible for their usage.

Platforms like X, Instagram, and Facebook are just the keys to opening Pandora’s box. Many Chinese sellers discreetly use terms like “vx” and “zfb” to hint at the real platforms where transactions take place: WeChat and Alipay, among others.

36Kr contacted one seller through a QQ number listed on X. To evade platform scrutiny, his status message stated explicitly that usage is for entertainment purposes only, and that illegal content is strictly forbidden—users bear responsibility for any consequences. Throughout the conversation, the seller maintained the guise of a regular software vendor, never mentioning anything sexual.

But sex was the unstated purpose of the transaction. Soon, the seller used QQ’s “flash photo” feature to send two pixelated deepfake images, which disappeared after three seconds.

Even within China, subjecting women to cyber shaming and escaping legal punishment is disturbingly easy.

AI has lowered the cost of committing online sexual violence while increasing the speed at which it spreads. According to the seller’s price list, a 10-second AI-generated face swap video costs just RMB 30, and an AI-generated nude photo is only RMB 10. Discounts are even available: buy five, get one free.

Originally, deepfake technology was built on generative adversarial networks (GANs), which were relatively harmless.

GANs, first introduced in 2014 by researchers at the Université de Montréal, initially faced every barrier that keeps a technology niche: the software was difficult to install, the technical requirements were high, and the training datasets and computing power were expensive. For a time, deepfaking remained confined to laboratories, out of reach of the general public.

But things began spiraling out of control around 2019.

That year, an app called DeepNude claimed it could undress anyone in 30 seconds. Ironically, the app was trained on 10,000 images of naked women, but if a user input a male photo, the software would add female genitalia instead of undressing him.

The rapid advancement of GAN technology and the rise of open-source software had lowered the barriers to deepfaking. An anonymous male programmer quickly built DeepNude based on an open-source GAN framework from UC Berkeley.

On one side, AI technology was progressing rapidly, while on the other, society and regulatory systems were unprepared for the impact of its unchecked growth.

DeepNude made creating non-consensual nude photos as simple as clicking a button. Under public pressure, the app was taken down just two weeks after its release. The anonymous developer, known only as Alberto, explained that the app stemmed from “curiosity” and “technical interest.” “I’m not a voyeur—I’m a tech enthusiast,” he said.

Although DeepNude was taken down, countless versions of the app, now more hidden, easier to use, and producing higher-quality images, have flooded the internet.

On Discord forums, the installation package for DeepNude is sold for USD 20. Anonymous sellers claim they have improved its stability and removed the watermark that the original app added to the generated images, making the deepfake nudes look even more realistic.

Within three weeks of DeepNude’s takedown, the app was retooled into a one-click undressing bot embedded in Telegram.

From there, events unfolded similarly to today’s Nth Room 2.0 case in South Korea. Users began creating groups on Telegram, and more than 100,000 people became early members of this new Nth Room.

Sensity, a cybersecurity company that first detected the bot, found that it had generated more than 680,000 fake nude images of women in less than a year. A total of 104,852 photos were publicly shared on the platform.

The downward spiral of women’s legal struggles

Since 2019, efforts to fight back against DeepNude have been ongoing.

In July 2019, the US state of Virginia passed a law banning the sale or distribution of computer-generated pornographic content. By the end of the year, platforms like X, Google, and Facebook began implementing measures to curb deepfake videos, such as adding watermarks and sponsoring research into detecting deepfakes.

However, the ongoing existence of deepfake chat rooms, whether in South Korea’s Nth Room 2.0 or in China’s underground market via platforms like QQ, Alipay, and Xianyu, reveals a stark truth: deepfaking has never really disappeared.

In 2019, The Citizen Lab at the University of Toronto conducted a study exploring how WeChat automatically censors large volumes of images in chats. The results showed that WeChat’s scrutiny is stricter in group chats and Moments than in one-on-one private conversations, with political and social news being the core focus of its image censorship.

This indicates that platforms still have significant gaps in their regulation of pornographic content. Deepfake transactions conducted in private chats are unlikely to be disclosed unless actively reported.

But can people always take the initiative to come forward?

Zhang Jing, the deputy director of a leading law firm in Beijing, has handled many cases involving women’s rights violations over the past two decades. She has fought for women against individual perpetrators, systemic injustices, and loopholes in the legal system. But the rise of deepfake sexual exploitation has left her feeling that women’s struggles have entered a new and more complex phase.

“In the past, when technology wasn’t as advanced, at least victims could identify their violators, and the harm could be quantified through forensic examinations,” Zhang told 36Kr. “But now, both the creators and distributors of deepfake content are faceless and untraceable, making it extremely difficult to gather evidence or determine sentencing.”

Many online bystanders ask why victims don’t simply report the abuse to the police. Even some female victims initially believed it would be that easy.

But before reporting it to the police, women must first gather a considerable amount of evidence. “To file a criminal case, basic evidence is required,” Zhang said.

Such evidence includes the specific websites where the deepfake content was shared, a sizable number of infringing images, and proof that the content was widely distributed.

“For those who don’t use the internet often, collecting such evidence isn’t easy. Some of these women may not even know how to access the websites in question,” Zhang continued.

Even when women manage to report a case and have it filed, the legal outcome for the perpetrators is often unsatisfactory.

A search for “AI face swapping” on the China Judgments Online database yields only 16 court documents, with the earliest case appearing in 2022. In the past two years, there have been no criminal cases involving deepfake violations.

Faced with these obstacles, women’s fight against deepfaking has become a downward spiral: societal pressure and strict case-filing requirements leave fewer women willing to come forward. This lack of voice and the low case numbers, in turn, make it harder to push for legislation.

In December 2023, a court ruling in Taiwan brought some hope for deepfake victims. An internet celebrity named Zhu Yuchen used deepfake technology to create and sell pornographic videos featuring over 100 well-known women, earning over TWD 13 million. He was sentenced to five years in prison for violating the territory’s Personal Data Protection Act and other charges.

But is five years enough?

“In the past, pornographic videotapes and 3D films were a specific niche industry, and their spread was unlikely to cause harm to a living person,” Zhang said.

“But the essential difference between today’s deepfakes and the pornography industry of the past is that its spread will cause harm to specific people in real life,” Zhang added. This means that “the sentencing for deepfakes based on the dissemination of obscene and pornographic content will inevitably be too light.”

The perpetrator faces a foreseeable five-year sentence, but the trauma may stay with women for far longer.

When she was 15, Xiaoyu (pseudonym) began to dread summer after being subjected to deepfake harassment by a boy who sat behind her. Once her white short-sleeved school uniform was soaked with sweat, her underwear and the curves of her body became visible. Even in heat of nearly 40 degrees Celsius, she chose to wear two short-sleeved shirts to block the boy’s view.

Xiaoyu told 36Kr that telling her teacher and parents felt futile. She remembered that when some boys in her class made dirty jokes about girls or pulled at their underwear straps, the teacher dismissed it as just a joke between children.

Now, more than a year has passed since the harassment. After entering high school, Xiaoyu lost contact with the boy sitting behind her, and her life returned to normal. However, Xiaoyu still keeps the habit of wearing two short-sleeved shirts in summer.

Is humanity ready for deepfaking technology?

In the wake of the South Korean deepfake scandal, Li Qian, co-founder of Avolution.ai, was furious. She dragged her co-founder into an all-night brainstorming session, and by the end, both were exhausted and hoarse. “I hate to say this, but the bad news is that there’s no way to completely eliminate deepfake sexual violence through technology,” Li told 36Kr.

While it’s nearly impossible to erase deepfakes entirely, there is a next best option: turning the tables and giving perpetrators a taste of their own medicine.

Xiao Zihao, co-founder of RealAI, explained how his company regularly conducts “red versus blue” exercises, where engineers are split into two teams: the red team, which simulates real-world attacks, and the blue team, which works to defend against them. Xiao revealed that red team engineers have infiltrated cybercriminal networks to gather intelligence, continuously innovating their strategies.

For those combating deepfakes with AI, the job is a constant battle against new, unforeseen cases. Tweaking software models every two weeks and iterating full-scale models every two months has become the norm.

However, most of this work focuses on early warning and prevention. When real violations occur, protecting women requires the firm hand of the law.

In November 2022, China introduced its first regulation specifically targeting deepfake technology. The regulation governs the creators, service providers, and distributors of deepfake content.

But Zhang, as a veteran lawyer who has spent 20 years fighting for women’s rights, said there’s a long road ahead before the law can be effectively enforced. Over her career, she has noticed a clear structural issue: most of the police officers, lawyers, and judges involved in handling these cases are men. “Even the legislative bodies are predominantly male,” she said, citing this as one of the reasons for weak sentencing and poor legal enforcement.

Zhang recalled attending a forum on the abolition of lenient sentences for soliciting underage girls. At the event, many male lawyers argued that changing the law to classify such acts as rape might drive offenders to resort to more dangerous methods, endangering victims even further.

In most cases where perpetrators have been detained and convicted, it’s largely thanks to women’s proactive efforts.

In 2023, during an AI face-swapping incident at Soochow University, the victim infiltrated the chat room where the deepfaked images were being shared. Using a methodical process of elimination, she posted photos with different tags across multiple groups, gradually narrowing down the suspects. Her persistence eventually led to the identification and capture of the perpetrator.

To advance legislation and public awareness, landmark legal cases are needed.

“With legal precedents, this history of women’s victimization could be included in school textbooks, pushing gender education forward,” a feminist activist in Japan said.

Because the AI industry remains a male-dominated field, some also believe that women are at greater risk of becoming a demographic “oppressed” by AI at every stage, from R&D to product implementation.

For deepfake legislation to advance meaningfully, society must also grapple with the industry’s darker undercurrents. According to Avolution.ai’s Li, adult content makes up a significant portion of AI training data—as much as 40% for internet-sourced video models, with 80% of that content featuring women’s bodies.

“Pornography is the most commercially successful business model for AI consumer products today,” an investor told 36Kr. For some developers, the economic incentives are simply too lucrative to resist.

Challenging this industry inertia is extraordinarily difficult. During Avolution.ai’s fundraising rounds, several investors, both male and female, suggested that relaxing restrictions on adult content would make commercialization smoother.

As the co-founder of a startup committed to ethical AI use, Li has refused to follow this path. “It would be easier to commercialize if we didn’t limit adult content, but once we open the floodgates, bad money will drive out the good,” she said.

In Li’s view, the future of AI would look different if more women held leadership roles in the tech industry. On September 4, Zhang Xinyi, a female engineer at the Chinese Academy of Sciences, took a step toward that vision. Her team made their deepfake detection model open-source, allowing anyone to use it for free.

In a Weibo post, Zhang wrote: “If one person’s torch is too small, we’ll pass the flame to everyone in the world.”

Zhang meant to highlight the belief that, while individual efforts may seem small, sharing and uniting with others can amplify their impact, creating a collective force for change.

Indeed, when new technology rapidly evolves, and the law struggles to keep pace, it’s the collective voices and actions of ordinary people that ultimately push society forward.

South Korean women are a living testament to this. In a deeply patriarchal society, every step forward for women’s rights has been hard-won through battles marked by blood and sweat. Since 2015, South Korean women have led a series of feminist movements tackling issues like MeToo, online sexual violence, and the decriminalization of abortion. These movements have been pivotal in shaping public opinion and changing laws.

Despite enduring relentless online abuse and harassment, the two female journalists who exposed the original Nth Room case, Kang Kyung-yoon and Park Hyo-sil, have persisted in their fight. One of them even suffered multiple miscarriages due to the mental toll of the attacks.

Yet, the women of South Korea have never stopped pushing forward. After the Nth Room case, RESET, a women’s organization focused on combating sexual exploitation crimes, was established. RESET has been instrumental in the creation of new laws targeting digital sex crimes. Earlier this year, one of the university students who helped expose the Nth Room played a key role in bringing a deepfake perpetrator to justice.

“We can’t stop, and we can’t give up,” Ji-young told 36Kr, reflecting the sentiments of women on the frontlines of the deepfake crisis. “This isn’t just a fight for our generation, but for the future of all women. I want a world where my friends, my family, and all women feel safe.”

Women across the globe are now joining forces in solidarity with South Korea. On September 3, a group of Chinese feminists living in London marched from Trafalgar Square to the South Korean embassy. Singing a feminist anthem, they protested South Korea’s deepfake and voyeurism scandals.

Emblazoned in bold red on their banners were the words: “My life is not your porn.”

KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Deng Yongyi, Zhou Xinyu, and Qiu Xiaofen for 36Kr.