Although Tesla has never publicly revealed the inner workings of FSD V12, China has seen a surge of interest in end-to-end (E2E) autonomous driving technology. Li Auto, one of the leading players in the smart driving market, is no exception. On July 5, Li Auto unveiled its E2E self-driving technology architecture for the first time.

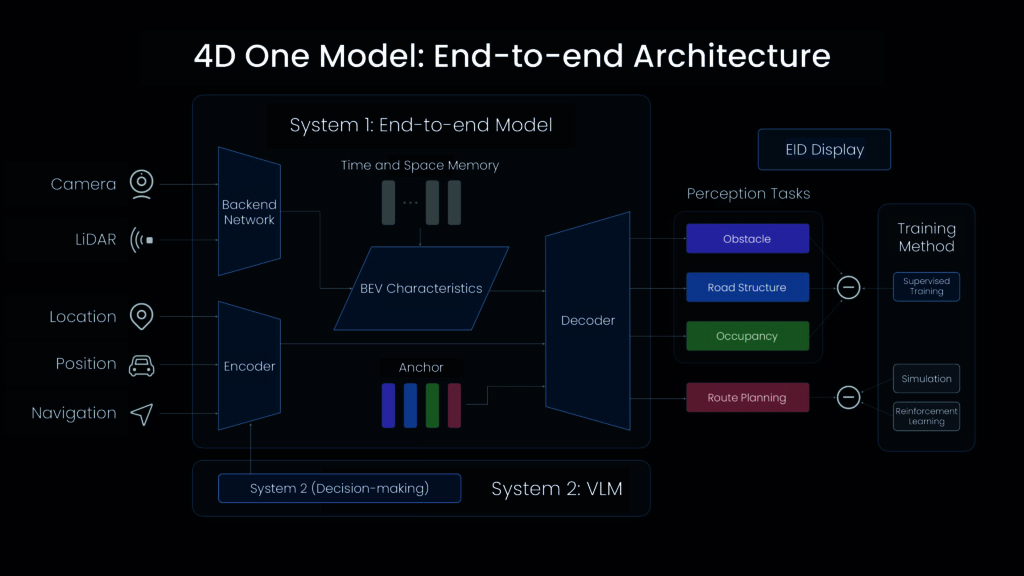

Li Auto’s architecture consists of three main components: an E2E model, a vision language model (VLM), and a world model. Li Auto has also launched an early bird testing program for this new technology.

Industry insiders note that, compared to Huawei and Xpeng Motors’ segmented E2E solutions, Li Auto’s approach is more aggressive. “From sensor input to driving trajectory output, it only goes through one model,” said Jia Peng, head of intelligent driving technology R&D at Li Auto, during the press conference.

Li Auto’s new architecture also integrates the VLM and the world model: the VLM helps the system handle complex urban roads, while the world model addresses the testing and verification problems that E2E solutions face.

Lang Xianpeng, Li Auto’s head of intelligent driving, said on social media that the E2E solution has been incubated and researched internally since the second half of 2023, and that the model has now completed prototype verification and real-vehicle deployment.

According to 36Kr, Li Auto has assembled a 300-person R&D team dedicated to E2E technology, working in closed-door development in Beijing, with plans to deliver phased results within the year.

Industry insiders have expressed concern that the smart driving market may be switching technological paths too quickly. In 2023, the mainstream solution was urban smart driving with lightweight high-precision maps; now the focus has moved to E2E solutions. This rapid shift is hard on development teams, because the next generation of solutions arrives before the previous one has been fully deployed.

However, these shifts also give latecomers like Li Auto a chance to catch up. In E2E solutions, at least, Li Auto starts from roughly the same line as its peers.

A step behind Tesla, a step ahead of segmented end-to-end solutions

Tesla led the way with end-to-end autonomous driving solutions. The premise is that, given enough high-quality data, a neural network can learn on its own to drive the way humans do. According to Tesla, end-to-end means outputting driving commands based solely on image inputs.

Compared to conventional smart driving solutions, E2E offers a higher technical ceiling because it does not rely on engineer-defined rules. Conventional solutions depend on such rules to make separate perception, decision-making, and planning modules work together.

However, each module operates independently, and the interfaces between modules, which engineers define, can lose information and introduce errors. This hurts overall performance and leaves engineers handling an endless stream of corner cases by hand, which is unsustainable.
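To make the interface problem concrete, here is a minimal, hypothetical sketch of a modular pipeline. The `Detection` type, the 0.5 confidence threshold, and the 30 m slowdown rule are invented for illustration and do not describe any automaker’s code. Because the perception module passes on only detections above an engineer-chosen threshold, the planner never sees a low-confidence obstacle, even though the raw data contained it.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Detection:
    label: str
    confidence: float  # detector score in [0, 1]
    distance_m: float  # distance ahead of the ego vehicle

# Hypothetical engineer-defined interface: only confident detections cross it.
CONFIDENCE_THRESHOLD = 0.5

def perception_module(raw: List[Detection]) -> List[Detection]:
    """Filter raw detections down to what the interface lets through."""
    return [d for d in raw if d.confidence >= CONFIDENCE_THRESHOLD]

def planning_module(objects: List[Detection], cruise_kmh: float) -> float:
    """Hand-written rule: slow to 20 km/h if any object is within 30 m."""
    if any(d.distance_m < 30.0 for d in objects):
        return min(cruise_kmh, 20.0)
    return cruise_kmh

raw = [Detection("unknown_obstacle", confidence=0.45, distance_m=18.0)]
print(planning_module(perception_module(raw), cruise_kmh=50.0))  # 50.0: obstacle lost at the interface
print(planning_module(raw, cruise_kmh=50.0))                     # 20.0: planner given the raw data
```

A model that learns directly from the raw signal does not have this particular hand-drawn boundary, though it also gives up the easy inspection that an explicit interface provides.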

E2E solutions appear to be a promising remedy. Tesla has made significant advances in this direction with FSD V12, and companies like Huawei, Xpeng, Nio, Momenta, SenseTime, and DeepRoute.ai are all striving to keep pace.

As the first domestic automaker to publicly disclose its E2E technical solution, Li Auto’s approach offers valuable insights.

Li Auto’s E2E “One Model” structure, with sensor information as the input and driving trajectory as the output, is not unique. Previously, SenseTime’s UniAD, a similar E2E autonomous driving solution, won the “Best Paper Award” at the Conference on Computer Vision and Pattern Recognition (CVPR) 2023.

Li Auto believes that, without intermediate rule intervention, its E2E model has advantages in information transmission, inference computation, and model iteration. The company says the model better understands general obstacles and road structure, can navigate beyond visual range, and finds paths in a more human-like way.

Technically, Li Auto goes a step further than Huawei’s and Xpeng’s segmented E2E approaches. Huawei’s E2E solution included large perception and pre-decision planning networks. Xpeng’s was divided into the neural network XNet, the large planning and control model XPlanner, and the large language model (LLM) XBrain.

However, industry technologists told 36Kr that training the One Model solution presents significant challenges. “Previously, when training planning and control, the perception module was assumed to be perfect, and both were trained independently. Problems were easier to pinpoint. But with the end-to-end solution, perception and planning are trained together, which can lead to negative optimization.”
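One way to see why problems become harder to pinpoint is a toy example with two linear stages standing in for perception and planning. This is a hypothetical illustration, not Li Auto’s training setup; the data, learning rate, and step count are assumptions. Trained separately, each stage is anchored to its own label. Trained jointly against the final trajectory only, the stages can settle on any split whose product fits, so the intermediate "perception" weight drifts away from its ground truth of 2.0 even though the end-to-end output is correct.

```python
# Toy data: x is the "sensor" input, p the ground-truth perception label,
# y the ground-truth trajectory target. By construction p = 2x and y = 3p.
xs = [1.0, 2.0, 3.0]
ps = [2.0 * x for x in xs]
ys = [3.0 * p for p in ps]

def fit_scale(inputs, targets):
    """Closed-form least squares for target = w * input."""
    return sum(i * t for i, t in zip(inputs, targets)) / sum(i * i for i in inputs)

# Modular training: each stage is fitted against its own label.
a_modular = fit_scale(xs, ps)  # perception weight
b_modular = fit_scale(ps, ys)  # planning weight, trained on perfect perception

def train_jointly(a=1.0, b=1.0, lr=0.01, steps=2000):
    """Gradient descent on mean squared error of the final output a*b*x only."""
    n = len(xs)
    for _ in range(steps):
        residuals = [a * b * x - y for x, y in zip(xs, ys)]
        grad_a = 2.0 / n * sum(r * b * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(r * a * x for r, x in zip(residuals, xs))
        a, b = a - lr * grad_a, b - lr * grad_b
    return a, b

a_joint, b_joint = train_jointly()
print(a_modular, b_modular)                   # 2.0 3.0
print(round(a_joint, 3), round(b_joint, 3))   # 2.449 2.449
```

Both versions reproduce the trajectory (the product is 6 in each case), but only the modular one keeps the intermediate stage meaningful on its own, which is what made faults easy to localize.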

Compared with Tesla’s E2E solution, Li Auto’s still appears to fall short.

Currently, the most advanced E2E solutions in China cover everything from perception through prediction and decision-making, but the final control execution module still relies on rules handwritten by engineers.
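The split between a learned planner and a handwritten controller can be sketched as follows. This is a hypothetical illustration: the `Waypoint` type, the proportional gain, and the acceleration limits are assumed values, not any automaker’s parameters. The learned model would supply the planned waypoints; the engineer-written rule turns the next target speed into a clamped acceleration command.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Waypoint:
    x_m: float
    y_m: float
    speed_mps: float  # target speed at this point of the planned trajectory

# Hand-tuned constants of the kind engineers write into rule-based control.
SPEED_GAIN = 0.5   # 1/s, assumed proportional gain
MAX_ACCEL = 2.0    # m/s^2, assumed comfort limit
MAX_DECEL = -4.0   # m/s^2, assumed braking limit

def longitudinal_command(current_speed_mps: float, plan: List[Waypoint]) -> float:
    """Track the next planned speed with a clamped proportional rule."""
    target = plan[0].speed_mps
    accel = SPEED_GAIN * (target - current_speed_mps)
    return max(MAX_DECEL, min(MAX_ACCEL, accel))

# Pretend this trajectory came from the learned E2E model.
plan = [Waypoint(5.0, 0.0, 8.0), Waypoint(10.0, 0.2, 8.0)]
print(longitudinal_command(10.0, plan))  # -1.0
```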

Vision language models: Helping smart vehicles understand the world

An interesting aspect of Li Auto’s end-to-end solution is its use of fast and slow thinking, inspired by Nobel laureate Daniel Kahneman’s dual-system theory:

- Li Auto sees the fast system, or “System 1,” as adept at handling simple tasks, much like human intuition formed through experience and habit, and sufficient for 95% of routine driving scenarios.

- The slow system, or “System 2,” represents human logical reasoning, complex analysis, and calculation abilities, used to tackle complex or unknown traffic scenarios, accounting for about 5% of daily driving.

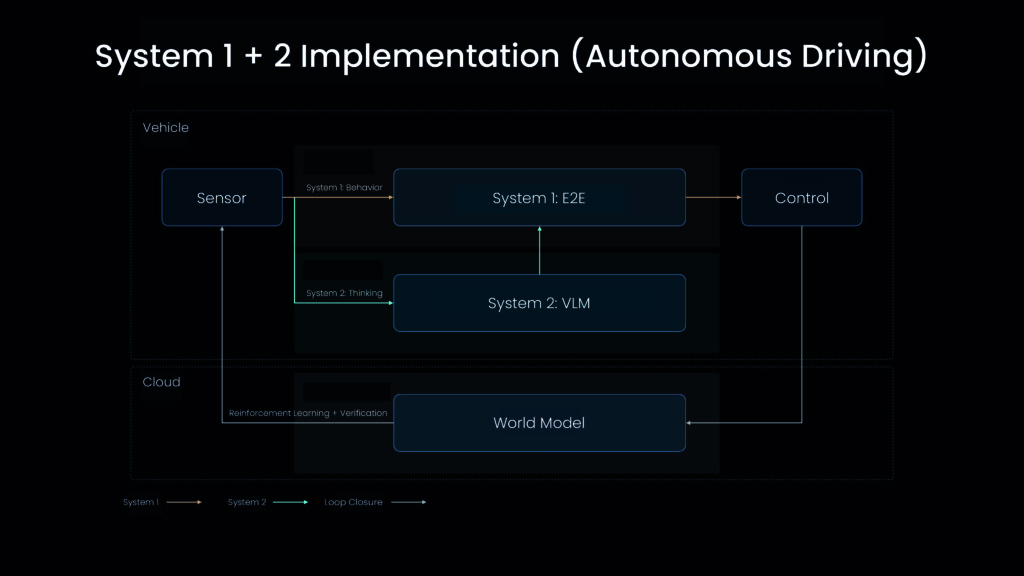

Li Auto built its architecture on this theory. System 1 is implemented by the E2E model for quick responses: it receives sensor inputs and directly outputs driving trajectories to control the vehicle. System 2 is implemented by the VLM, which runs concurrently, processes sensor inputs, reasons through the scene, and outputs decision information to System 1. Both systems’ capabilities will also be trained and verified in the cloud using the world model.
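The division of labor can be sketched as a control loop in which System 1 runs every tick and System 2 runs less often, with System 1 always consuming System 2’s most recent advice. This is a hypothetical illustration: the `Advice` type, the frame format, and the one-in-ten update rate are assumptions, both models are replaced by trivial stand-ins, and a real system would run System 2 asynchronously rather than inside the same loop. The pothole rule borrows Li Auto’s 40 km/h to 32 km/h example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Advice:
    """Hypothetical decision information that System 2 passes to System 1."""
    speed_cap_kmh: Optional[float] = None

def system2_vlm(frame: Dict) -> Advice:
    """Stand-in for the slow VLM: only weighs in on unusual situations."""
    if frame.get("pothole_ahead"):
        return Advice(speed_cap_kmh=32.0)
    return Advice()

def system1_e2e(frame: Dict, advice: Advice) -> float:
    """Stand-in for the fast E2E model: returns a target speed every tick."""
    speed = frame["cruise_kmh"]
    if advice.speed_cap_kmh is not None:
        speed = min(speed, advice.speed_cap_kmh)
    return speed

def drive(frames: List[Dict], slow_every: int = 10) -> List[float]:
    advice = Advice()
    speeds = []
    for tick, frame in enumerate(frames):
        if tick % slow_every == 0:  # System 2 updates at a lower rate
            advice = system2_vlm(frame)
        speeds.append(system1_e2e(frame, advice))  # System 1 acts every tick
    return speeds

frames = [{"cruise_kmh": 40.0}] * 5 + [{"cruise_kmh": 40.0, "pothole_ahead": True}] * 15
speeds = drive(frames)
print(speeds[:5], speeds[5:10], speeds[10:])
```

Ticks 5 to 9 still run at 40 km/h because System 2 has not yet looked again; in this design the slow model’s latency shows up as stale advice, which is one reason its on-vehicle inference time matters.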

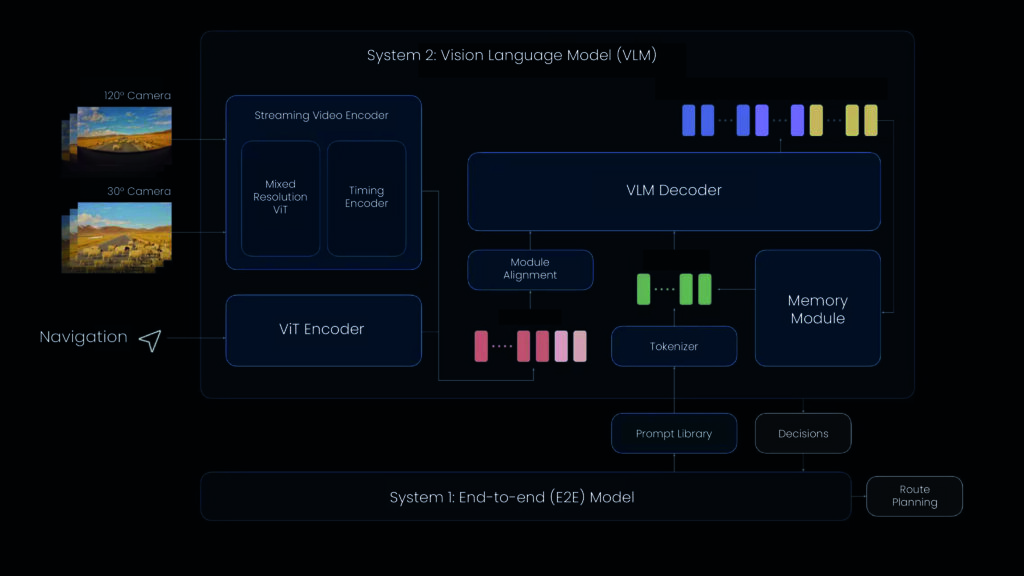

According to Li Auto, the VLM has a strong understanding of complex traffic environments in the physical world. It can recognize road surface conditions and lighting, and it understands navigation maps well enough to correct routes together with the vehicle’s onboard system. It can also comprehend complex traffic rules such as bus lanes, tidal (reversible) lanes, and time-based restrictions, and make reasonable driving decisions accordingly.
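A time-based restriction is easy to state as code once the rule is known; the hard part, which the VLM is meant to handle, is reading it off an arbitrary sign. The hypothetical sketch below hard-codes one such rule for a bus lane, with assumed bus-only windows of 07:00 to 09:00 and 17:00 to 19:00, purely to show what the model has to infer. It is not how the VLM itself works.

```python
from datetime import time
from typing import List, Tuple

# Hypothetical sign: bus-only 07:00-09:00 and 17:00-19:00 (assumed windows).
BUS_ONLY_WINDOWS: List[Tuple[time, time]] = [
    (time(7, 0), time(9, 0)),
    (time(17, 0), time(19, 0)),
]

def bus_lane_open_to_cars(now: time, windows=BUS_ONLY_WINDOWS) -> bool:
    """Cars may use the lane only outside every bus-only window (end exclusive)."""
    return not any(start <= now < end for start, end in windows)

print(bus_lane_open_to_cars(time(8, 30)))  # False
print(bus_lane_open_to_cars(time(12, 0)))  # True
```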

For example, when there is a pothole ahead, System 2 will suggest reducing speed from 40 kilometers per hour (km/h) to 32 km/h. Xpeng’s LLM, XBrain, has similar capabilities, recognizing waiting zones, tidal lanes, special lanes, and text on road signs.
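The pothole example amounts to a 20% speed cut. A quick worked check of what that means physically uses the constant-deceleration relation d = (v0^2 - v1^2) / (2a). The deceleration of 1.0 m/s^2 below is an assumed gentle comfort value, not a figure from Li Auto.

```python
def slowdown_distance_m(v_from_kmh: float, v_to_kmh: float, decel_mps2: float) -> float:
    """Distance covered while slowing at constant deceleration: (v0^2 - v1^2) / (2a)."""
    v0 = v_from_kmh / 3.6  # km/h -> m/s
    v1 = v_to_kmh / 3.6
    return (v0 ** 2 - v1 ** 2) / (2.0 * decel_mps2)

cut = (40.0 - 32.0) / 40.0
print(f"{cut:.0%}")                                    # 20%
print(round(slowdown_distance_m(40.0, 32.0, 1.0), 1))  # 22.2
```

Under that assumption, the advice has to take effect roughly 22 m before the pothole, plus whatever distance the car covers while System 2 is still reasoning.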

Li Auto’s VLM has 2.2 billion parameters. Although this is far fewer than the hundreds of billions of parameters in LLMs like the one that powers ChatGPT, Li Auto aims to deploy both the E2E model and the VLM on its in-vehicle chips, which is still demanding.
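A back-of-the-envelope estimate shows why even 2.2 billion parameters is a lot for an in-vehicle chip. Li Auto has not said what numeric precision it uses, so this sketch tabulates weight memory for several common precisions; it counts weights only and ignores activations, caches, and the E2E model sharing the same chip.

```python
VLM_PARAMS = 2.2e9  # parameter count stated by Li Auto

# Bytes per parameter for common storage precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params: float, precision: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9

for name in BYTES_PER_PARAM:
    print(f"{name}: {weight_memory_gb(VLM_PARAMS, name):.1f} GB")
# fp32: 8.8 GB, fp16: 4.4 GB, int8: 2.2 GB, int4: 1.1 GB
```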

Getting there took substantial optimization. Zhan Kun, a senior algorithm expert at Li Auto, said the VLM’s initial inference time on the vehicle was as long as 4.1 seconds. After continuous optimization, overall inference performance has improved roughly 13-fold, and inference now takes only 0.3 seconds.
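The two latency figures can be sanity-checked with a few lines of arithmetic. The speedup works out to about 13.7x, consistent with the roughly 13-fold figure. The sketch also converts each latency into distance traveled at an assumed 60 km/h, which shows why a multi-second delay is unusable while driving.

```python
BEFORE_S = 4.1  # initial on-vehicle VLM inference time
AFTER_S = 0.3   # inference time after optimization

def distance_during_latency_m(speed_kmh: float, latency_s: float) -> float:
    """Distance the car travels while waiting for one inference result."""
    return speed_kmh / 3.6 * latency_s

speedup = BEFORE_S / AFTER_S
print(f"speedup: {speedup:.1f}x")  # speedup: 13.7x
for latency in (BEFORE_S, AFTER_S):
    # 60 km/h is an assumed urban speed, not a figure from the source.
    print(f"{latency} s at 60 km/h -> {distance_during_latency_m(60.0, latency):.1f} m")
```

At the assumed speed, the car would cover about 68 m while waiting on the original model and about 5 m after optimization.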

Currently, the only mainstream smart driving chips that support E2E solutions are Tesla’s HW3.0 and Nvidia’s Orin, the latter of which powers Li Auto’s vehicles. However, industry insiders told 36Kr: “For a model with around 2 billion parameters, like Li Auto’s, latency is already at its limit. If larger models are to be deployed in the future, Nvidia’s next-generation chip, Thor, with over 1,000 TOPS of computing power, might be needed.”

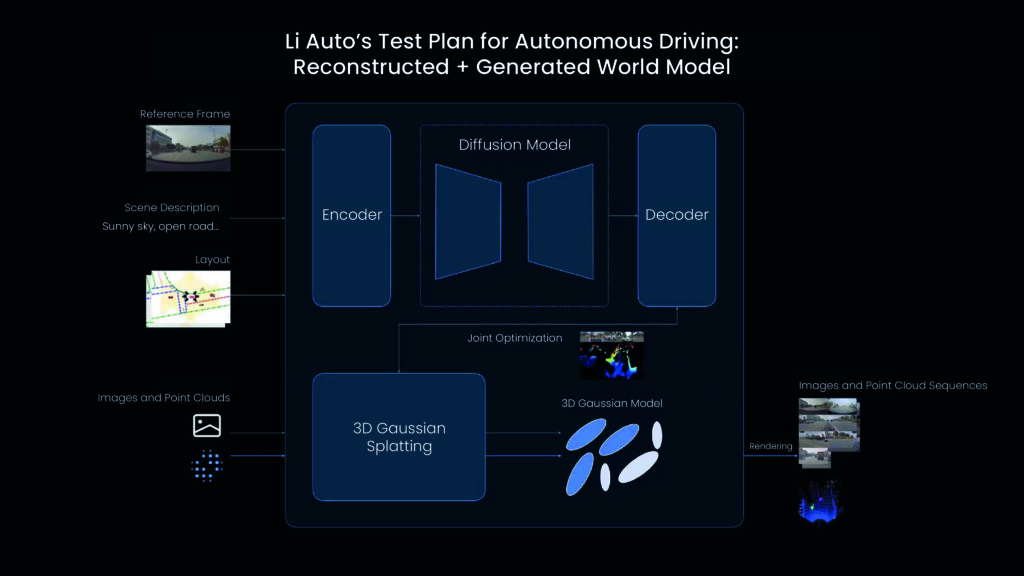

Li Auto also outlined how it tests and verifies its E2E solution. The industry has mainly relied on virtual reconstruction and simulation for smart driving testing, but the advent of generative AI has made generative simulation a significant trend.

Li Auto has combined reconstruction and generative simulation to build a world model for testing and verifying its E2E solution. The automaker claims that scenarios built this way provide a superior virtual environment for learning and testing autonomous driving capabilities.
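The general idea of testing a driving policy in closed loop against many variants of a recorded scenario can be sketched in a few dozen lines. This is a deliberately simple, hypothetical illustration: variants come from randomly perturbing one parameter of a logged car-following scene (a basic scenario-perturbation technique, not a generative world model), point-mass kinematics stand in for the simulator, and `simple_policy` is an invented rule standing in for the system under test.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Scenario:
    """A logged car-following situation (stand-in for a reconstructed scene)."""
    ego_speed_mps: float
    lead_speed_mps: float
    gap_m: float
    lead_decel_mps2: float

def make_variants(base: Scenario, n: int, seed: int = 0) -> List[Scenario]:
    """Perturb the lead car's braking; a crude stand-in for generative simulation."""
    rng = random.Random(seed)
    return [Scenario(base.ego_speed_mps, base.lead_speed_mps, base.gap_m,
                     rng.uniform(0.5, 8.0)) for _ in range(n)]

def simple_policy(gap_m: float, ego_v: float, lead_v: float) -> float:
    """Hypothetical policy under test: returns acceleration in m/s^2."""
    time_gap_s = gap_m / max(ego_v, 0.1)
    if time_gap_s < 1.5 or lead_v < ego_v:
        return -6.0
    return 0.0

def run(s: Scenario, policy: Callable[[float, float, float], float],
        dt: float = 0.05, horizon_s: float = 10.0) -> bool:
    """Closed-loop rollout; True if the ego car never reaches the lead car."""
    ego_v, lead_v, gap = s.ego_speed_mps, s.lead_speed_mps, s.gap_m
    for _ in range(round(horizon_s / dt)):
        accel = policy(gap, ego_v, lead_v)  # policy reacts to the simulated state
        ego_v = max(0.0, ego_v + accel * dt)
        lead_v = max(0.0, lead_v - s.lead_decel_mps2 * dt)
        gap += (lead_v - ego_v) * dt
        if gap <= 0.0:
            return False
    return True

base = Scenario(ego_speed_mps=15.0, lead_speed_mps=15.0, gap_m=30.0, lead_decel_mps2=3.0)
variants = make_variants(base, n=200)
pass_rate = sum(run(v, simple_policy) for v in variants) / len(variants)
print(f"pass rate: {pass_rate:.0%}")
```

The value of closed-loop testing is that the policy’s own actions change what it sees next, which replaying a fixed log cannot capture.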

However, Li Auto’s dual-system solution (E2E and VLM) is not yet ready for delivery to users. In July, Li Auto will instead push a segmented E2E navigate-on-autopilot (NOA) solution to users, usable on roads nationwide in China.

Currently, the smart driving industry faces a two-pronged challenge, in business and in technology. On one hand, it needs to steadily roll out smart driving to users at scale while keeping them satisfied. On the other hand, it must keep up with technological changes such as E2E systems.

This requires carmakers to have strong engineering, implementation, and technical judgment capabilities, so that their vehicles deliver a superior user experience while their technology keeps advancing. It is a challenge that Li Auto, Huawei, Xpeng, Nio, and numerous other players all must confront.

KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Li Anqi for 36Kr.