Charlotte Brunquet is a senior data scientist at Quilt.AI. She has nine years of experience in data analytics and tech, supply chain management, and project management across Europe and Asia, including six years in the FMCG industry with Procter & Gamble.

The following interview was conducted by Amel Rigneau, founder of DigitalMind Media. It has been edited for brevity and clarity.

Amel Rigneau (AR): How did you become a data scientist?

Charlotte Brunquet (CB): After almost six years in supply chain roles with P&G France, I joined Bolloré Logistics to create the Digital Innovation department in Singapore. I launched global programs, such as RPA (robotic process automation), to automate manual and repetitive tasks. That’s when I realized that I enjoyed coding and using my technical skills to build business solutions. I took online courses in data science and machine learning and learned to code in Python. I was also looking for a sense of purpose in my job, so when I met the CEO of Quilt.AI, a Singaporean startup that works with both commercial companies and NGOs, I decided to leave the supply chain world and join the startup as a data scientist.

AR: How do you help companies improve their utilization of data?

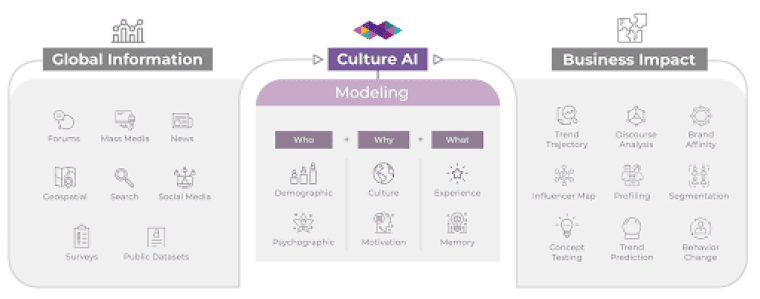

CB: I work with anthropologists and sociologists to understand human culture and behavior. They mostly rely on quantitative surveys and focus groups to gain insights into areas such as consumer preferences and growth strategies.

Working hand in hand with researchers, our team of engineers created machine learning models that recognize emotions in pictures, identify user profiles based on their bios, and capture highlights in videos. By running those algorithms on large data sets pulled from across social media platforms, we can build a comprehensive and empathetic understanding of people.

What excites me most is applying these technologies to the nonprofit sector. NGOs want to better understand the needs of the communities they help, and also how to support them more efficiently and drive behavior change. For example, we helped foundations understand the anti-vaccine movement in India by analyzing the Twitter profiles of users spreading such messages and of their followers, as well as Google searches and YouTube videos.

AR: We hear a lot about the negative impacts of AI, such as job losses, discrimination, or manipulation. How do you ensure that your technology is used for good?

CB: Technology itself is neutral; it’s never good or bad. It all depends on how it’s used. Nuclear fission enabled carbon-free energy, but it also led to the creation of weapons of mass destruction. The internet gave almost everyone around the world access to information, but it also led to the creation of the dark web. The same goes for AI. The risk of negative applications doesn’t mean that we have to stop using new technology and deprive ourselves of its benefits.

At Quilt.AI, we follow a few ground rules to ensure we prioritize ethics and humanity.

- We never do “behavior change” projects for commercial companies, only for nonprofit organizations.

- We don’t share any individual data and we use publicly available data only.

- Finally, we don’t do any studies on people under the age of 18.

Of course, other organizations around the world could be doing the same work without these priorities in mind. That’s why it’s urgent for governments and institutions to establish rules that regulate AI on a global scale. In April 2019, the European Commission’s High-Level Expert Group on AI released its Ethics Guidelines for Trustworthy AI, which promote the development, deployment, and use of trustworthy AI technology. The OECD has published principles that emphasize developing AI that respects human rights and democratic values, ensuring that those affected by an AI system “understand the outcome and can challenge it if they disagree.” However, none of these documents carries regulatory force; they are guidelines meant to shape the conversation about how AI is used.

AR: What are the limitations of AI? What role do humans play in this increasingly automated world?

CB: What we should not forget is that AI is trained by humans. We are still far from artificial general intelligence, in which machines can replicate the full range of human abilities, and there is no consensus on whether, or when, this will be achieved. What AI lacks is common sense, as well as the ability to apply knowledge gained in one domain to another.

A study by researchers at York University and the University of Toronto gives us an example: they tricked a neural network trained to recognize objects in images by pasting an elephant into a living room scene. Before the elephant was added, the algorithm recognized all the objects (chair, couch, television, person, book, handbag, and so on) with high accuracy. As soon as the elephant was introduced, however, it became confused and misidentified objects it had previously detected correctly, even ones located far from the elephant in the image. In market research specifically, AI still does not perform well when it comes to understanding context, sarcasm, or irony.

Algorithms help us identify trends as well as prevailing emotions and topics, but we still need human interpretation to grasp the full complexity of another human being.

AR: What is something you’ve enjoyed working on in the field of AI?

CB: In my current team, our objective is to build “empathy at scale” by creating algorithms that understand all facets of human personalities and culture without bias. A prerequisite for success is to be inclusive from the start, and that’s where our team of anthropologists comes into play. We recently built an application that identifies people’s cultural style—hipster, corporate, punk, hippie, and so on—instead of the usual gender and ethnicity recognition tools, and people love it.

To read similar stories, please hop on to Oasis, the brainchild of KrASIA.

Disclaimer: This article was written by a community contributor. All content is written by and reflects the personal perspective of the interviewee herself.

French Tech Singapore is a nonprofit organization that brings together French entrepreneurs and locals working in Singapore’s tech industry. La French Tech encompasses all startups, i.e., all growth companies that share a global ambition, at every stage of their development, from embryonic firms to growing startups with several hundred employees and their sights set on the international market. As is the case all over the world, digital technology is a major catalyst for its development, and French Tech represents digital pure players as well as startups in medtech, biotech, cleantech, and other fields.