I woke up startled this morning, opened my computer, and found a Shiba Inu smirking at me from the screen.

Turns out, I forgot to close LivePortrait before going to bed last night.

Yes, I’m talking about that facial expression transfer project that Kuaishou open-sourced during the World Artificial Intelligence Conference (WAIC). For those who missed it, let’s do a quick review.

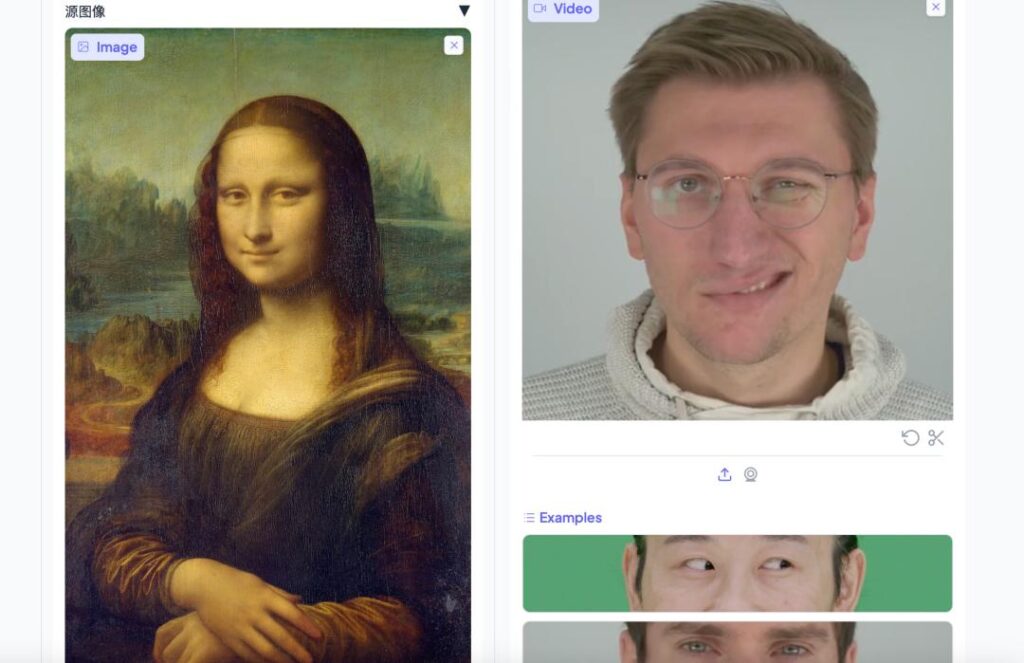

Initially, LivePortrait’s main function was to transfer facial expressions onto portraits. For instance, even if you’ve never been to the Louvre, you can still make the Mona Lisa smirk at you, like this:

Doesn’t it look natural? Perhaps even da Vinci never saw her expressions this vividly.

Creating a short video like this is incredibly simple. Just drag the original photo into the left box on the screen and the expression video you want into the right box. With one click, you get a smooth transfer.

But if it were just about transferring expressions, there wouldn’t be much to talk about. From the famous Sora to newer players like Dream Machine and Viggle, any mature AI video model can do something similar with ease.

What makes LivePortrait stand out is its ability to transfer not just expressions but actions as well:

With this tool, it seems you no longer need to wait for your favorite star to shoot a scene. Just input their photo, tweak it a bit, and you can create your own content… Just kidding.

Today, LivePortrait has received a major upgrade: it can now transfer human expressions onto animals, just like the Shiba Inu I mentioned earlier.

Since its open-source release in July, LivePortrait has drawn widespread attention from AI enthusiasts worldwide. As of this writing, it has garnered at least 10,000 stars and 1,000 forks on GitHub.

Back in June, while browsing Bilibili, we came across a post by a user hinting that LivePortrait would be further optimized. We didn’t expect the update to arrive so soon, or to be this impressive.

I took a closer look at the corresponding paper, “LivePortrait: Efficient Portrait Animation with Stitching and Retargeting Control.”

In simple terms, rather than building on the diffusion models that dominate the field, LivePortrait uses an implicit keypoint framework and focuses on three goals: generalization, controllability, and practical efficiency. That emphasis on generalization helps explain why the upgraded version can carry human expressions over to animals so smoothly.
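For readers curious what an "implicit keypoint framework" means in practice: as we understand the paper, which builds on earlier implicit-keypoint work, each face is reduced to a small set of 3D keypoints, and motion is expressed by transforming identity-specific canonical keypoints x_c with a head-pose rotation R, an expression offset δ, a scale s, and a translation t, roughly x = s·(x_c R + δ) + t. The Python sketch below is our own illustration of that single formula, not code from the LivePortrait repository. The function names, the yaw-only rotation, and the toy values are assumptions made for illustration; in the real system, neural networks predict these quantities and a separate generator renders the final frame.

```python
import math


def rotation_yaw(theta):
    """Hypothetical helper: 3x3 rotation about the vertical (y) axis.

    A real head pose combines yaw, pitch, and roll; yaw alone keeps the sketch short.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def transform_keypoints(canonical, rotation, expression, scale, translation):
    """Apply x = scale * (x_c @ R + delta) + t to each 3D keypoint.

    canonical:   list of K points [x, y, z] (identity-specific canonical keypoints)
    rotation:    3x3 matrix R (head pose)
    expression:  list of K offsets delta (expression deformation)
    scale:       scalar s
    translation: length-3 vector t
    """
    result = []
    for point, delta in zip(canonical, expression):
        # Row vector times matrix: (x_c R)_j = sum_i x_c[i] * R[i][j]
        rotated = [sum(point[i] * rotation[i][j] for i in range(3)) for j in range(3)]
        # Add the expression offset, then scale and translate
        result.append([scale * (rotated[j] + delta[j]) + translation[j] for j in range(3)])
    return result


if __name__ == "__main__":
    canonical = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # two toy keypoints
    smile = [[0.1, 0.0, 0.0], [0.0, 0.0, 0.0]]      # hypothetical expression offset
    moved = transform_keypoints(canonical, rotation_yaw(math.radians(15)),
                                smile, 1.0, [0.0, 0.0, 0.0])
    print(moved)
```

Keeping the canonical keypoints fixed while swapping in a driving video's pose and expression is, loosely speaking, what lets one face move like another without changing whose face it is.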

To improve generation quality while keeping the model efficient and controllable, the team scaled training to roughly 69 million high-quality frames, adopted a mixed image-video training strategy, upgraded the network architecture, and refined how motion is modeled and optimized.

Experiments show that, running in PyTorch on an RTX 4090 GPU, LivePortrait can generate a frame in 12.8 milliseconds, and with further optimizations such as TensorRT, that time is expected to drop below 10 milliseconds.
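To put the per-frame figure in perspective, here is a quick back-of-the-envelope conversion. This is our own arithmetic, assuming the 12.8 ms figure covers the model's processing of a single frame and excludes loading, queueing, and video encoding:

$$
\frac{1000\ \text{ms}}{12.8\ \text{ms/frame}} \approx 78\ \text{frames per second},
\qquad
\frac{1000\ \text{ms}}{10\ \text{ms/frame}} = 100\ \text{frames per second}
$$

Either rate is comfortably faster than standard 24 or 30 fps video playback.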

However, our testing did turn up a weakness: if the faces in the source image, human or animal, aren’t clear enough, or if the subject isn’t facing the camera squarely, glitches can occur, such as the skin staying frozen while the facial features move erratically.

For example, take a look at this cat, twitching all over:

As an image-to-video model, LivePortrait has inevitably been compared with Sora since its debut. It’s hard to say definitively which is technically superior, but in our trials so far, LivePortrait, developed jointly by Kuaishou, the University of Science and Technology of China (USTC), and Fudan University, offers a friendlier experience tailored to Chinese users.

Generation is also fast: an adorable cat or dog video takes only five to six minutes, which is hard to resist.

We tried “reviving” a photo of a cat we used to have, and the moment it started shaking its head again, we couldn’t help tearing up. Suddenly, we understood the obsession of Tu Hengyu, Andy Lau’s character in The Wandering Earth 2, with his digital daughter.

While it’s not the same thing, the feeling is quite similar.

Technology, of course, can have warmth. On a larger scale, as more video generation models mature, interactions that transcend time and space might soon become the norm, playing a bigger role in recording our emotions, preserving oral history, and recreating historical scenes.

Besides Kuaishou’s LivePortrait, other Chinese video generation models include Follow-Your-Click (a joint project between Tencent, Tsinghua University, and Hong Kong University of Science and Technology), ByteDance’s Dreamina, Zhipu AI’s Qingying, AIsphere’s PixVerse V2, and SenseTime’s Vimi, among others.

Who will become China’s Sora? The dust hasn’t settled yet. For now, each of these products still shows generation flaws to varying degrees and has some way to go before catching up to Sora.

Meanwhile, with the Ghost Festival upon us, why not let someone you miss, whether him, her, or it, smile at you once more, just like before?

KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Chi Meng for 36Kr.