This story originally appeared in Open Source, our weekly newsletter on emerging technology.

December is typically a slow month for business, yet things are more bustling than ever at Google. On December 6, 2023, the tech giant announced the release of Gemini, its latest artificial intelligence model.

According to Google, Gemini is a fundamentally new kind of AI model. It can understand not just text but also images, videos, and audio. The company also said Gemini is its most powerful model to date, claiming it outperforms OpenAI’s GPT-4 on 30 out of 32 standard performance measures.

As a multimodal model, Gemini is described as capable of completing complex tasks spanning various fields. Google launched Gemini with demos showing it writing code, explaining math problems, identifying similarities between images, comprehending emojis, and a whole lot more.

Gemini comes in three versions, which are rolling out in stages:

- Gemini Ultra, the most powerful variant, is tailored for complex tasks like scientific research and drug discovery. It promises groundbreaking capabilities, though its release is slated for 2024.

- Gemini Pro, the general-purpose model, can power a range of applications, including chatbots, virtual assistants, and content generation. It is now accessible to developers and enterprise customers via Google AI Studio or Vertex AI in Google Cloud. It has also been plugged into Bard to handle prompts that require advanced reasoning, planning, and understanding.

- Gemini Nano, the most efficient version, is designed for on-device tasks, bringing AI capabilities natively to Android devices. It has been built into the Pixel 8 Pro to handle tasks such as summarization.

Why the buzz?

With GPT-4 having so far been considered the industry’s gold standard, Google’s assertion that Gemini outmatches it has naturally drawn widespread attention and scrutiny. Since a claim from Google is unlikely to be unfounded, curiosity has centered on the next big question: better, but by how much?

As it turns out, not much.

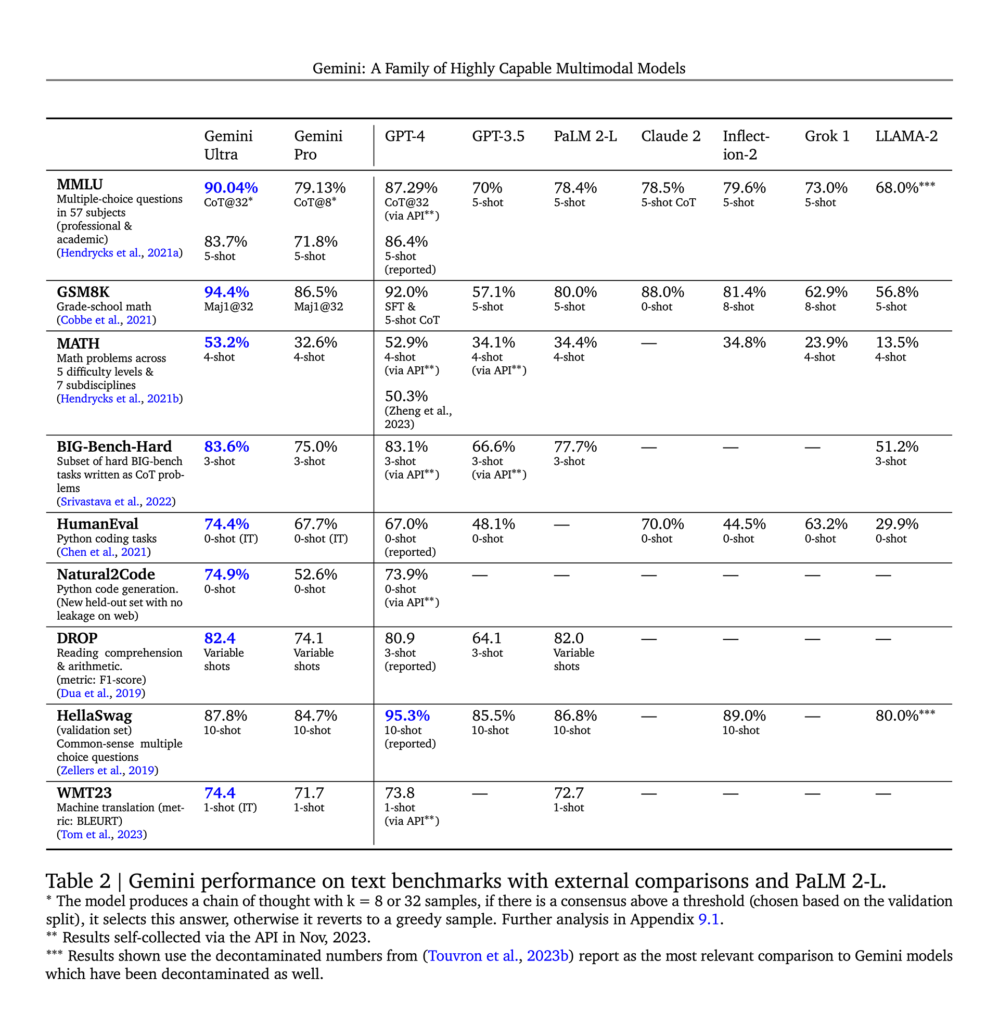

According to results published by Google DeepMind, Gemini outperformed GPT-4 on the majority of measures (30 out of 32), although the differences are marginal. These results stem from tests evaluating the models’ performance across various tasks involving text, images, and combinations of these modalities:

- For text-only questions, Gemini Ultra scored 90.0% while GPT-4 scored 87.3% on the massive multitask language understanding (MMLU) benchmark, a gap of 2.7 percentage points.

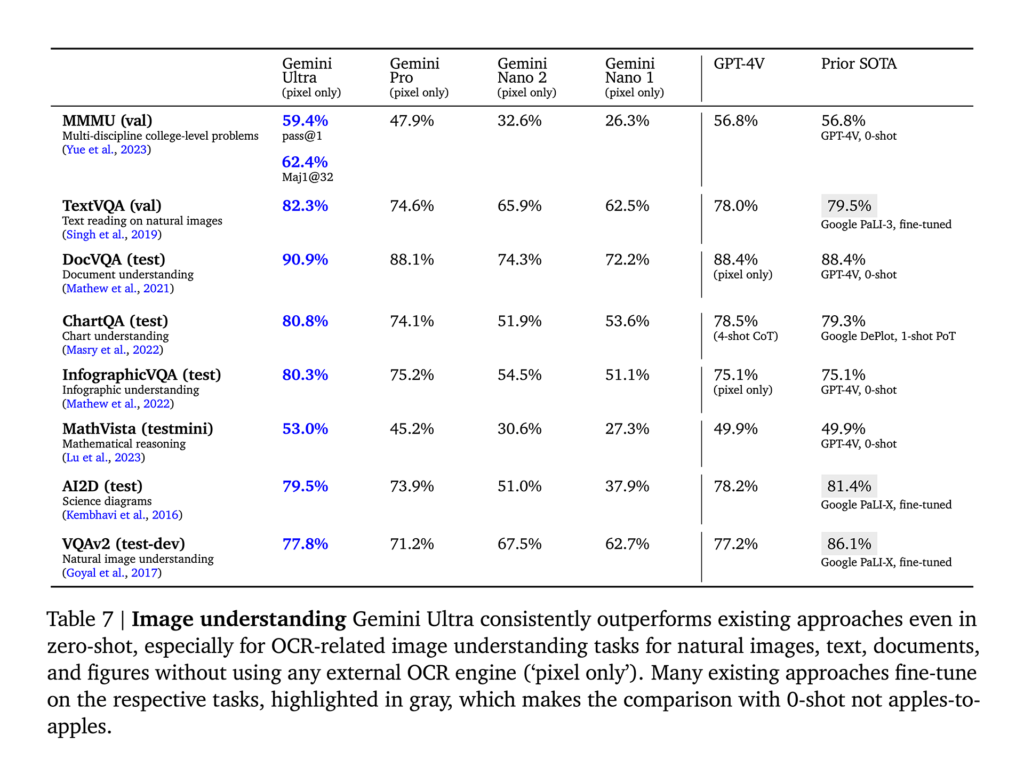

- For multimodal tasks, Gemini Ultra scored 59.4%, slightly higher than the 56.8% scored by GPT-4V (GPT-4 with vision) on the massive multi-discipline multimodal understanding (MMMU) benchmark, a gap of 2.6 percentage points.

Despite the slim margins, Gemini hardly warrants a scathing review. Considering Google’s late entry into the AI race, the tech giant has made significant strides to catch up with, if not surpass, one of its most serious competitors in the AI space.

If anything, Google deserves more criticism for the way it produced its demo video for Gemini: reducing latency, truncating model outputs for brevity, and using audio overlays to (misleadingly) portray Gemini as an all-purpose, powerful AI capable of responding to user inquiries in real time. It’s good, but not that good yet.

The big picture

Unless you’re a specialist in AI or a related field, the results of benchmark tests may appear humdrum, offering little insight beyond identifying which models excel at specific tasks.

That’s perfectly fine, because benchmarks are just benchmarks. Ultimately, the true test of Gemini’s capability (and that of any other AI model, for that matter) will come from everyday users, like you, who may use it to brainstorm ideas, search for information, write code, and more.

In line with this perspective, Gemini is pretty cool, but it’s not revolutionary, at least not yet.

Gemini holds an advantage over GPT-4 because it can draw on information from Google’s widely used search engine, in addition to what it can gather from the wider internet; OpenAI primarily works with the latter. Moreover, if SemiAnalysis’s claims prove accurate, Gemini can tap into significantly more computing power than GPT-4, courtesy of Google’s ready access to top-tier chips. Against this backdrop, Gemini’s stronger MMLU and MMMU results become even less surprising.

There are bigger and more pertinent questions that need answering, often concerning more fundamental aspects of AI models. For example, is Gemini less prone to hallucination (or, better yet, immune to it), the tendency of most large language models (LLMs) to make things up?

While Google is eager to impress the public with Gemini, even the company is quick to temper expectations. Demis Hassabis, co-founder and CEO of Google DeepMind, told Wired that “to deliver AI systems that can understand the world in ways that today’s chatbots can’t, LLMs will need to be combined with other AI techniques.”

Hassabis is probably correct. What he was alluding to is artificial general intelligence, or AGI. The major players are clearly cognizant of it and actively working toward it. Rumors are even swirling that OpenAI has made a breakthrough in this regard, through a project reportedly referred to as Q* (Q-star).

As more AI companies enter the fray and introduce newer LLMs and related technology, achieving AGI seems inevitable, albeit likely on a timeline longer than enthusiasts and innovators would like.

Google, initially complacent, entered the AI race after OpenAI introduced ChatGPT to much acclaim. While its response, first with Bard and now Gemini, may not be groundbreaking, it shows how latecomers can narrow the gap through concerted effort. If anything, that is the biggest takeaway for other AI enterprises seeking to catch up with the heavyweights.
